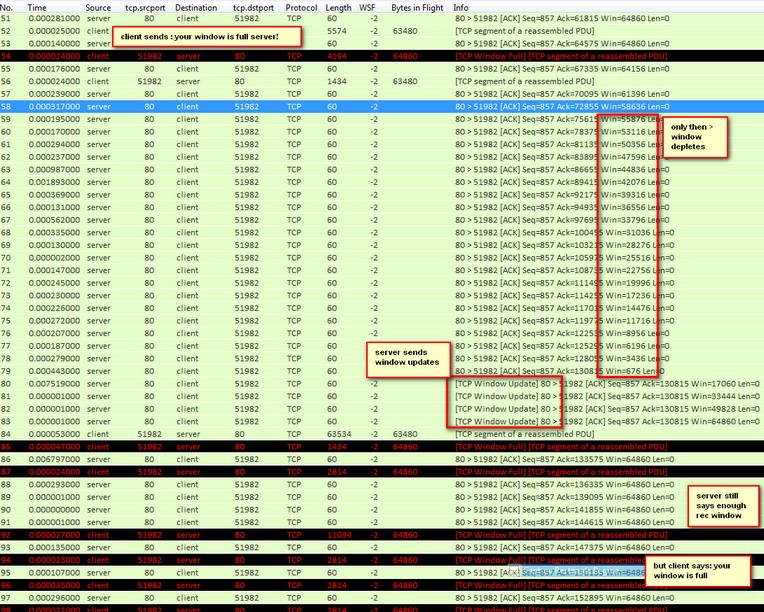

Hi all, I've got a trace where it looks like a client tells the server its receive window is full... Then the server backs off and ACKs all outstanding/received data (then we see its receive window deplete) and then sends a window update to the client to start sending again. The client sends data quickly, thinks the receive window of the server is full again and throttles back, but the server (again!) thinks its receive window is still free... Then we see "Window Full" statements from the client to the server and ACKs from the server stating the window is free. What wondrous application triggers this behavior? (It's a SharePoint server.)

asked 03 Jun '13, 00:17 by Marc, edited 03 Jun '13, 00:33

2 Answers:

The "Window Full" message is not something the client sends; it is a diagnosis Wireshark made and tagged the frame with. When a receiver can't take more data, it reduces its advertised window size to zero (again diagnosed by Wireshark, which tags that frame "Zero Window"). What a "Window Full" diagnosis means is that the sender has pushed as much data onto the line as it is allowed to: it has sent out as many unacknowledged bytes as the receiver said it was able to receive. That usually happens on a fast connection with higher latency, and it indicates that the receiver's window size is too small to allow full-speed transfers, because as soon as the window is full the sender has to stop sending further data until acknowledgements come in. In your case the receiver (here the server) almost gets into an "I can't take more data" situation in frame 79 (window very close to 0) before recovering in frame 81, but that costs you about 7 milliseconds of delay. Those delays don't hurt much if this happens only once in a while, but if the transfer does this all the time, even small delays add up to a long wait.

answered 03 Jun '13, 00:46 by Jasper ♦♦, edited 03 Jun '13, 02:18
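To make the distinction concrete, here is a simplified Python sketch of the two diagnoses. It assumes relative sequence numbers (no 32-bit wraparound) and an advertised window that has already been multiplied by the window-scale factor; the function names are made up for illustration, and Wireshark's real expert-info logic handles more cases. It only shows the idea that "Window Full" is judged on the sender's data segments and "Zero Window" on the receiver's ACKs.

```python
def in_flight_after(seq: int, length: int, last_ack: int) -> int:
    """Unacknowledged bytes once the data segment [seq, seq + length) has been sent.

    last_ack is the highest ACK number seen from the receiver so far.
    """
    return (seq + length) - last_ack


def window_full(seq: int, length: int, last_ack: int, advertised_window: int) -> bool:
    """Sender-side diagnosis: this data segment uses up all of the receiver's window."""
    return length > 0 and in_flight_after(seq, length, last_ack) >= advertised_window


def zero_window(advertised_window: int) -> bool:
    """Receiver-side diagnosis: the receiver advertises that it can take no more data."""
    return advertised_window == 0


# Hypothetical numbers: the receiver has ACKed up to 1000 and advertises 4380 bytes,
# and the sender keeps sending 1460-byte segments.
LAST_ACK, RWND, MSS = 1000, 4380, 1460
for seq in (1000, 2460, 3920):
    print(seq, window_full(seq, MSS, LAST_ACK, RWND))  # only the third segment fills the window
print(zero_window(0))  # an ACK advertising window 0 would be tagged "Zero Window"
```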

Keep in mind that window-full situations can be, and actually are, absolutely normal if (!!) you're capturing near the sending side, which seems to be the case here as well, if I'm reading the timings in your screenshot correctly as delta times. As Jasper stated, if there is no performance problem with the total speed of the upload, window-full messages can be ignored when they are explained by lower bandwidth somewhere on the path to the destination, e.g. client sending at over 100MB/s -> routed over a 16MB/s WAN -> server. This is a typical situation where packets are "in flight", sitting in various infrastructure buffers and "dropping" out at the server's location steadily.

answered 03 Jun '13, 02:24 by Landi

Well... "the path" from client to server is always full-duplex > 100 Mbit with ample bandwidth to spare, and the SYN to SYN/ACK latency (i.e. without server processing) is only 0.005503 seconds, so it must be processing latency on the server then. And yes, at first I was thinking about some device buffering chunks near the end... Are VMs known to buffer in such a fashion? (03 Jun '13, 23:33) Marc
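A rough back-of-the-envelope sketch of Landi's bottleneck example, treating the 100MB/s and 16MB/s rates from the answer as bytes per second and assuming a hypothetical 64 KiB window. It ignores propagation delay and assumes the bottleneck buffers are large enough that nothing is dropped; it only shows why a capture near the sender sees the whole window leave almost immediately while most of it is still queued upstream.

```python
def burst_at_bottleneck(window_bytes: float, sender_rate: float, bottleneck_rate: float):
    """Return (seconds for the sender to emit one full window,
    bytes still queued at the bottleneck when the sender finishes,
    seconds until the last byte of the window has left the bottleneck).
    Rates are in bytes per second."""
    send_time = window_bytes / sender_rate
    drained = bottleneck_rate * send_time      # bytes that got through the slow link meanwhile
    queued = window_bytes - drained            # bytes sitting in infrastructure buffers
    drain_time = window_bytes / bottleneck_rate
    return send_time, queued, drain_time


# Hypothetical 64 KiB window; rates taken from the example in the answer.
send_t, queued, drain_t = burst_at_bottleneck(65536, 100e6, 16e6)
print(f"sender done after {send_t * 1e3:.2f} ms, {queued:.0f} bytes still queued, "
      f"last byte leaves the bottleneck after {drain_t * 1e3:.2f} ms")
```

The gap between the two times is where a sender-side capture shows "Window Full": the sender is blocked, but the data is not lost, just queued on the slower part of the path.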

Hi Jasper, thanks for making that distinction (it's Wireshark that tells us the window-full condition exists), and yes, it does happen a lot. Different clients have the same issue with that server. It will affect throughput too, right?

Yes, it will; it's basically a stop-and-go situation. The sender sends at full speed until it has to stop and wait for acknowledgements to come in. Then it sends at full speed again and has to wait again, and so on.
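The stop-and-go effect can be put into numbers with a simple steady-state model (an assumption, not something measured in this trace): the sender emits a full window at link rate, then idles until ACKs return one round trip after the burst started. The 100 Mbit/s link rate and the 0.005503 s RTT come from Marc's comment above (the SYN to SYN/ACK time used as the RTT); the window sizes are hypothetical.

```python
def window_limited(window_bytes: float, link_rate: float, rtt: float) -> tuple[float, float]:
    """Return (throughput in bytes/s, fraction of each cycle the sender sits idle).

    Throughput works out to min(link_rate, window_bytes / rtt).
    """
    burst_time = window_bytes / link_rate  # time to put the whole window on the wire
    cycle = max(rtt, burst_time)           # the next window can't open before ACKs return
    idle = cycle - burst_time              # the "stop" part of stop-and-go
    return window_bytes / cycle, idle / cycle


LINK = 100e6 / 8  # 100 Mbit/s expressed in bytes/s
RTT = 0.005503    # SYN -> SYN/ACK time from the thread, used as the RTT

for window in (16384, 32768, 65535):  # hypothetical receive windows
    tput, idle = window_limited(window, LINK, RTT)
    print(f"{window:6d} B window: {tput * 8 / 1e6:5.1f} Mbit/s, idle {idle:.0%} of the time")
```

Once the window is large enough that the burst takes longer than the RTT, the idle fraction drops to zero and the link rate becomes the limit instead.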

You could try to either increase the receive window size on your receiver, so the sender can keep sending for a longer period of time, or make the receiver process the incoming data faster. The latter is usually not that easy, because you need to either optimize the application code or replace the hardware with something faster.
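To estimate how big the receive window would need to be, the usual rule of thumb is the bandwidth-delay product: link rate times round-trip time. The sketch below assumes a 100 Mbit/s path and again takes the 0.005503 s SYN to SYN/ACK time as the RTT; the helper names are hypothetical. Because the TCP header's window field is only 16 bits, anything above 65535 bytes needs the window scale option (RFC 7323, maximum shift 14), so the sketch also computes the smallest shift that would cover the estimate.

```python
MAX_UNSCALED_WINDOW = 65535  # largest value the 16-bit window field can carry
MAX_WINDOW_SHIFT = 14        # largest shift count allowed by RFC 7323


def required_window(link_bits_per_s: float, rtt_s: float) -> float:
    """Bandwidth-delay product in bytes: the amount of unacknowledged data
    needed to keep the link busy for a full round trip."""
    return link_bits_per_s / 8 * rtt_s


def window_scale_needed(window_bytes: float) -> int:
    """Smallest window-scale shift s with 65535 << s >= window_bytes (capped at 14)."""
    shift = 0
    while (MAX_UNSCALED_WINDOW << shift) < window_bytes and shift < MAX_WINDOW_SHIFT:
        shift += 1
    return shift


bdp = required_window(100e6, 0.005503)  # assumed 100 Mbit/s path, RTT from the thread
print(f"window needed: {bdp:.0f} bytes, window scale shift needed: {window_scale_needed(bdp)}")
```

With those assumptions the estimate comes out at roughly 68.8 KB, just above what an unscaled window can advertise, so it's worth checking in the trace whether window scaling was negotiated in the SYN and SYN/ACK.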

Updated: replaced client/server with sender/receiver to avoid confusion

Ah, it's the client sending all the data to the server (upload to SharePoint), so I'll have a closer look at the server's specs. Cheers, Jasper!

Correct, sorry, I got mixed up with client/server labeling in my last comment, so I fixed it.