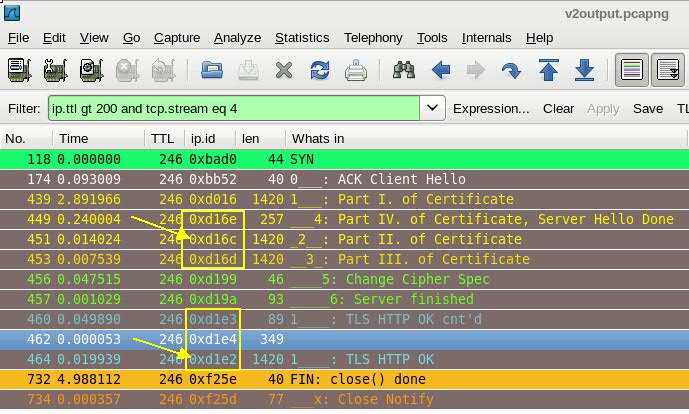

I'm currently having a problem with one particular website. Loading just the main page can take upwards of 20 seconds. To see what was taking so long, I did a packet capture and found many retransmissions and duplicate ACKs (they seem to make up the majority of the capture).

The thing is, it only seems to happen at our office. The website administrator reports no problems from any other customers, and this seems to be true: doing the same packet capture from home, I get no errors and the website loads in 2–3 seconds on average. I've also used websites that test load times from different geographical locations, and they gave similar results.

I've also run a tracert from home and from the office, and the only difference is in the first couple of hops; after those, the paths are exactly the same. The difference in those first hops is that I have residential service, whereas the office has business-class service. We have BGP set up with two different service providers, and forcing the connection over either ISP doesn't produce any different results.

This capture is from a client PC going to the website: http://cloudshark.org/captures/e7a9522b9b91

I'm thinking it's how the packets are being routed from the website to our office, but I'm just guessing. Is there anything in the packet capture that I'm missing? Or is there something I can do to narrow the problem down a little more?

asked 01 Jul '13, 06:43 Aziz, edited 01 Jul '13, 07:00
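A duplicate ACK is how the receiving side says "I am still waiting for byte N": it keeps acknowledging the same sequence number while later segments arrive, and (per RFC 5681) the third duplicate triggers a fast retransmit at the sender. A minimal sketch of counting them, assuming the ACKs have already been exported from the capture in arrival order; the `Ack` tuple and `count_dup_acks` are hypothetical names, and the rule is simpler than Wireshark's own `tcp.analysis.duplicate_ack` logic.

```python
from typing import NamedTuple


class Ack(NamedTuple):
    """One segment sent by the receiving host (hypothetical reduced form)."""
    ack: int     # acknowledgment number
    window: int  # advertised receive window
    length: int  # TCP payload length in bytes


def count_dup_acks(acks: list[Ack]) -> int:
    """Count duplicate ACKs in arrival order.

    Simplified heuristic: a segment counts as a duplicate ACK when it
    carries no payload and repeats both the acknowledgment number and the
    window of the segment immediately before it.
    """
    dups = 0
    prev = None
    for seg in acks:
        if (prev is not None and seg.length == 0
                and seg.ack == prev.ack and seg.window == prev.window):
            dups += 1
        prev = seg
    return dups


if __name__ == "__main__":
    # Hypothetical ACK stream: the receiver is stuck waiting for byte 2381.
    stream = [Ack(1001, 65535, 0), Ack(2381, 65535, 0),
              Ack(2381, 65535, 0), Ack(2381, 65535, 0), Ack(6521, 65535, 0)]
    print(count_dup_acks(stream))  # 2
```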

3 Answers:

There are actually not as many lost packets as Wireshark would have us believe. A lot of packets are arriving out of order because full-MSS-sized packets get delayed by 14–19 ms, whereas small packets seem to arrive without any additional delay.

answered 03 Jul '13, 01:51 mrEEde2
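The distinction above can be expressed as a receiver-side rule: a segment that starts below the highest byte already received is either filling a gap (its bytes have never been seen, so it may only have been delayed) or repeating bytes that already arrived (a genuine resend). A minimal sketch, assuming relative sequence numbers and payload lengths of data segments for one direction, in arrival order; the names are hypothetical. A capture at the client cannot tell a delayed original from a resend whose original was dropped, so a real analyzer needs extra evidence such as timing; this sketch only separates first-time bytes from repeated ones.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    seq: int     # relative sequence number of the first payload byte
    length: int  # TCP payload length in bytes (data segments only)


def classify(segments: list[Segment]) -> list[str]:
    """Label data segments as 'advance', 'out-of-order' or 'retransmission'.

    'advance': starts at or beyond the highest byte seen so far.
    'out-of-order': fills a gap with bytes that were never seen before.
    'retransmission': repeats at least one byte that already arrived.
    """
    received: set[int] = set()  # every byte offset seen (fine for a sketch)
    highest = 0                 # one past the highest byte seen so far
    labels = []
    for seg in segments:
        span = range(seg.seq, seg.seq + seg.length)
        if seg.seq >= highest:
            label = "advance"
        elif any(b in received for b in span):
            label = "retransmission"
        else:
            label = "out-of-order"
        labels.append(label)
        received.update(span)
        highest = max(highest, seg.seq + seg.length)
    return labels


if __name__ == "__main__":
    mss = 1380
    trace = [Segment(1, mss), Segment(2761, 200),  # bytes 1381-2760 missing
             Segment(1381, mss),                   # gap filled: delayed, not lost
             Segment(1381, mss)]                   # same bytes again: real resend
    print(classify(trace))
    # prints: ['advance', 'advance', 'out-of-order', 'retransmission']
```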

I guess you anonymized the trace? The MAC addresses make no sense, and the IP addresses look like you've hashed them.

It sure looks like you've got tons of packet loss. Right at the start you have 3 packets lost that have to be retransmitted in frames 10, 14 and 12, and the one in frame 10 looks like it is RTO-based (because of a delta time of almost 3 seconds). No surprise that loading the page takes forever. You should try to find the spot where the packets are lost (if you can) by moving the capture point closer to the server until you have pinpointed it.

answered 01 Jul '13, 06:57 Jasper ♦♦, edited 01 Jul '13, 06:58
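Why a gap of almost 3 seconds suggests a timer rather than a fast retransmit: until the sender has an RTT measurement it uses a fixed initial RTO (3 s in the older RFC 2988, 1 s in RFC 6298), and RFC 6298 recommends rounding any smaller RTO up to 1 s (some stacks, such as Linux, use a lower floor), while a fast retransmit follows the duplicate ACKs roughly one round trip after the loss. A minimal sketch of the RFC 6298 estimator; the class name, the clock granularity and the 60 s cap are assumptions, not values taken from the trace.

```python
from typing import Optional


class RtoEstimator:
    """Retransmission timeout (RTO) estimator following RFC 6298, in seconds."""

    ALPHA = 1 / 8    # gain for the smoothed RTT
    BETA = 1 / 4     # gain for the RTT variance
    K = 4
    MIN_RTO = 1.0    # RFC 6298: an RTO below 1 s SHOULD be rounded up to 1 s
    MAX_RTO = 60.0   # optional upper bound; RFC 6298 requires it be >= 60 s

    def __init__(self, initial_rto: float = 1.0, granularity: float = 0.001):
        # initial_rto: 1 s per RFC 6298; RFC 2988 specified 3 s.
        # granularity: clock granularity G, an assumed value here.
        self.srtt: Optional[float] = None
        self.rttvar: Optional[float] = None
        self.g = granularity
        self.rto = initial_rto

    def on_rtt_sample(self, r: float) -> float:
        """Update the estimate with a new RTT measurement r and return the RTO."""
        if self.srtt is None or self.rttvar is None:
            self.srtt = r
            self.rttvar = r / 2
        else:
            # RFC 6298 updates RTTVAR first, using the old SRTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - r)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * r
        rto = self.srtt + max(self.g, self.K * self.rttvar)
        self.rto = min(max(rto, self.MIN_RTO), self.MAX_RTO)
        return self.rto

    def on_timeout(self) -> float:
        """Back off after the timer expires: double the RTO, up to MAX_RTO."""
        self.rto = min(self.rto * 2, self.MAX_RTO)
        return self.rto


if __name__ == "__main__":
    est = RtoEstimator()
    print(est.on_rtt_sample(0.05))  # 1.0 (0.15 s raw, raised to the 1 s floor)
    legacy = RtoEstimator(initial_rto=3.0)
    print(legacy.on_timeout())      # 6.0
```

With a 50 ms round trip the computed RTO sits on the 1 s floor, so a resend arriving seconds after the original is far more likely to be timer-driven than a fast retransmit.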

As there are a lot of 'lost packets' in the capture file, you ruled out the ISPs, and you see the same problem with other sites, what remains?

I would start by looking at the internet router and/or firewall. Maybe one of those drops packets. A common problem is a mismatch in the speed and duplex settings of the involved links, so please check those first. Then try to capture traffic near the client and, in parallel, in front of the internet router (internal/external side) and/or the internet firewall (internal/external side), and compare the two capture files.

Regards

answered 01 Jul '13, 22:21 Kurt Knochner ♦
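Comparing the two captures suggested above boils down to finding packets that show up at the outer capture point but never at the inner one. A minimal sketch, assuming each capture has been exported as (ip.id, tcp.seq, tcp.len) tuples for the server-to-client direction (for instance with tshark's `-T fields` output), and that nothing between the two points rewrites those fields. NAT usually changes addresses, which is why they are left out of the key, and some firewalls rewrite sequence numbers, which would break it. `missing_downstream` is a hypothetical helper.

```python
from collections import Counter

# Hypothetical packet identity: (ip.id, tcp.seq, tcp.len).
Key = tuple[int, int, int]


def missing_downstream(upstream: list[Key], downstream: list[Key]) -> list[Key]:
    """Return packets seen at the upstream capture point but not downstream.

    Keys are matched as a multiset, so two identical packets upstream need
    two matches downstream before both count as delivered.
    """
    remaining = Counter(downstream)
    lost = []
    for key in upstream:
        if remaining[key] > 0:
            remaining[key] -= 1
        else:
            lost.append(key)
    return lost


if __name__ == "__main__":
    outside = [(101, 1, 1380), (102, 1381, 1380), (103, 2761, 200)]
    inside = [(101, 1, 1380), (103, 2761, 200)]
    print(missing_downstream(outside, inside))  # [(102, 1381, 1380)]
```

If the list is empty at the router but not at the client, the drop is somewhere on the LAN side; if packets are already missing at the router's external side, the problem lies upstream.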

This can be observed throughout the trace. The really 'lost' packets all seem to be full-MSS (tcp.len==1380) packets, so I wonder whether a traffic shaper is involved here.
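The traffic-shaper suspicion can be checked by asking whether the flagged segments cluster in one size class. A minimal sketch, assuming the server-to-client data segments were exported as (tcp.len, flagged) pairs, where "flagged" could be taken from Wireshark's `tcp.analysis.retransmission` field; the function name and the sample data are hypothetical.

```python
from collections import defaultdict

MSS = 1380  # full-sized payload seen in this trace (tcp.len==1380)


def flagged_ratio_by_size(segments: list[tuple[int, bool]],
                          mss: int = MSS) -> dict[str, float]:
    """Fraction of flagged segments among full-MSS and smaller payloads.

    segments: (tcp.len, flagged) pairs for data segments only, where
    flagged marks a segment reported as retransmitted (hypothetical format).
    """
    totals: dict[str, int] = defaultdict(int)
    flagged: dict[str, int] = defaultdict(int)
    for length, is_flagged in segments:
        bucket = "full-mss" if length == mss else "smaller"
        totals[bucket] += 1
        if is_flagged:
            flagged[bucket] += 1
    return {bucket: flagged[bucket] / totals[bucket] for bucket in totals}


if __name__ == "__main__":
    sample = [(1380, True), (1380, False), (1380, True), (1380, False),
              (200, False), (512, False)]
    print(flagged_ratio_by_size(sample))  # {'full-mss': 0.5, 'smaller': 0.0}
```

A large gap between the two ratios, as in the made-up sample, would support a size-dependent drop; similar ratios would argue against it.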

@Aziz: Your CloudShark trace contains seemingly unanonymized information, such as public IP addresses and the certificates indicating the name of your company/target company. I removed the link to the CloudShark trace.

If you are sure this information is ok to be spread, feel free to edit your post and re-insert the link.

Thank you for double checking. I did change the mac and IP addresses and I'm OK with people knowing the website.

@Landi FYI, removing a link from a page here does not really delete it, it can always be seen in the revisions of the page.

@SYN-bit: Thanks, good to know. So how should this be handled next time? ;)

@Landi: I don't see a way of editing (or deleting) a question permanently without the original content remaining available through revisions (or the old link).