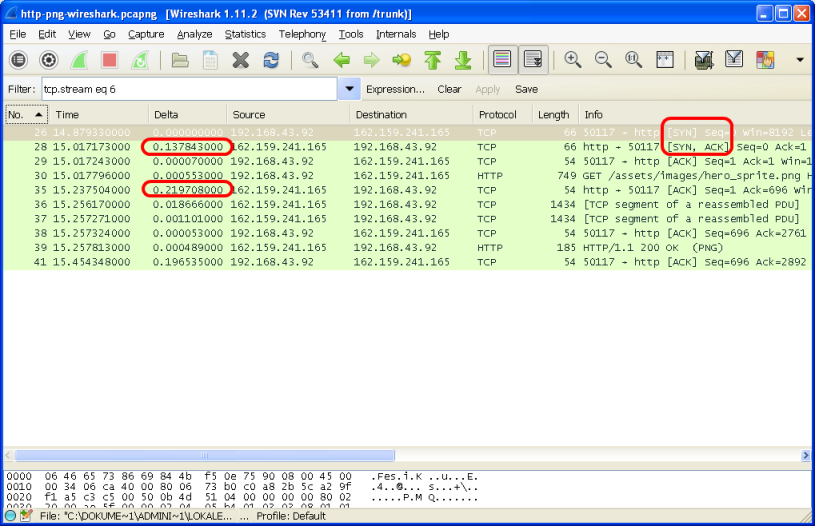

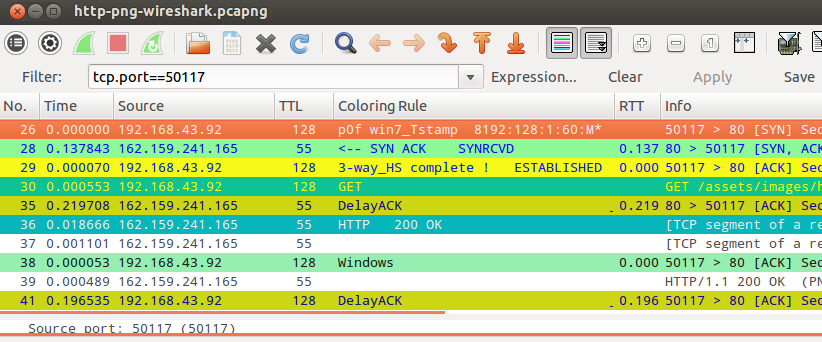

Hi,

I'm trying to understand the time breakdown for a simple HTTP conversation. I've shared the capture file http-png-wireshark.pcapng, uploaded at: https://drive.google.com/file/d/0BwRchYLsDMZiRE5wakNQVmpkODQ/edit?usp=sharing

Please select the first filter:

(ip.addr eq 192.168.43.92 and ip.addr eq 162.159.241.165) and (tcp.port eq 50117 and tcp.port eq 80)

The HTTP request is: http://www.wireshark.org/assets/images/hero_sprite.png

Frame 30 is the HTTP GET request. Please explain the time spent between:

Frame 30->35 (219ms) - see the DeltaConv column

Frame 35->36 (18ms)

Frame 39->41 (196ms)

Thanks, Kapil

asked 17 Feb '14, 01:53 kapilok

2 Answers:

That's the RTT (Round-Trip Time) of the line (0.137843 seconds - see the delta between frame 26 (SYN) and frame 28 (SYN-ACK)) plus the time the server OS needed to create the ACK (0.081865s == 0.219708s - 0.137843s). So, the server-side delay between the GET and the ACK is actually just 0.081865s. That's quite O.K., and it obviously depends on the load on the server and/or network components.
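As a rough sketch of that arithmetic in Python (the two values are the ones quoted above from the capture; the function name is only illustrative, and the calculation assumes the RTT measured during the handshake still holds when the GET is sent):

```python
def server_side_delay(delta_total: float, rtt: float) -> float:
    """Estimate the server-side delay as seen from a client-side capture.

    delta_total: time between the request and the reply, measured at the client
    rtt:         round-trip time, here taken from the SYN -> SYN-ACK delta
    """
    return delta_total - rtt


RTT = 0.137843          # frame 26 (SYN) -> frame 28 (SYN-ACK)
DELTA_TOTAL = 0.219708  # frame 30 (GET) -> frame 35 (ACK)

delta_process = server_side_delay(DELTA_TOTAL, RTT)
print(f"{delta_process:.6f} s")  # prints "0.081865 s"
```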

Regards

answered 17 Feb '14, 04:17 Kurt Knochner ♦ edited 17 Feb '14, 04:22

Both hosts are delaying acknowledgements by 200ms. This is intentional and usually nothing to be worried about. Both sides have nothing more to send in your conversation...

answered 17 Feb '14, 02:38 mrEEde

The ACK in 35 is just an ACK, zero data length, so you might think it would be just as quick as the SYN-ACK. But it could be that the server delays the ack until the response is ready. The first byte of response data is packet 36.

If you are analysing server response time, it is also useful to differentiate the Time to First Byte (TTFB) from the Time to Last Byte (TTLB). The server is usually "done" once it has started responding and sent the first byte, but the client won't do much with the data until it receives the last byte.
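To make the distinction concrete, here is a minimal Python sketch. The helper `ttfb_ttlb` and the packet list are hypothetical; the timestamps are made up for illustration and are not taken from the capture.

```python
from typing import List, Tuple


def ttfb_ttlb(request_time: float,
              response_packets: List[Tuple[float, int]]) -> Tuple[float, float]:
    """Return (TTFB, TTLB) in seconds, relative to the request.

    response_packets holds (timestamp, tcp_payload_length) for each packet
    from the server. Pure ACKs have a payload length of 0 and are ignored.
    """
    data_times = [ts for ts, length in response_packets if length > 0]
    if not data_times:
        raise ValueError("no response data seen")
    return min(data_times) - request_time, max(data_times) - request_time


# Hypothetical timestamps in seconds: a bare ACK followed by three data packets.
packets = [(0.220, 0), (0.238, 1400), (0.239, 1400), (0.250, 600)]
ttfb, ttlb = ttfb_ttlb(0.0, packets)
print(f"TTFB={ttfb:.3f}s TTLB={ttlb:.3f}s")
```

The zero-length ACK is skipped on purpose: as noted above, the first byte of response data arrives in a later packet than the ACK.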

Thanks guys, but still not very clear. Please correct my assertions below, if they are false:

Thanks, Kapil

1.) No. It's the sum of

deltaSend + deltaProcess + deltaReceive = deltaTotal

where

Only deltaProcess is the time of server-side processing

Look at it this way:

So: deltaProcess = deltaTotal - 2 * RTT/2 = deltaTotal - RTT (see my answer)

2.) Actually, the 18.6 ms are just the delta between the ACK (frame #35) and the first data frame (frame #36) sent by the server. That’s another aspect of the processing time within the server. You cannot determine from a capture file taken on the client why it took a certain amount of time, as most of that time is spent in the TCP stack of the server (not considering varying delay on the line, as that cannot be detected with only one capture point).

3.a.) that’s the time it took the server to send the first data frame after the ACK. The server software writes x bytes (> 1500) to the local TCP socket. The TCP stack splits that into several TCP frames. It took the TCP stack 18.6 ms to create and send that first data frame, following the ACK (not considering varying delay on the line, as that cannot be detected with only one capture point).

3.b.) It’s less time, because the splitting operation took place in the TCP stack of the server. Apparently it took only 1.1 ms to do that. The size of the frame does not matter here, as the RTT is the same for all frames. So, the time difference/delta you see in the capture file on the client is exactly the same delta you would see in a server capture file or within the TCP stack.

4.) That’s probably delayed ACK on the client.