In the following question, @hoangsonk49 claims that he has been running tshark continuously for 6 months without running into the memory usage problems predicted by the Wiki. Question:

Last comment/answer of @hoangsonk49:

Presumably he is using the following command:

My understanding of the dissection engine is the following: Running tshark on a link without any filter (I'm waiting for a comment of @hoangsonk49 on that issue), he should run into a memory problem sooner or later, as almost every dissector creates at least an entry in the conversation hash tables. Some dissectors also add additional data to a conversation (e.g. HTTP). So, the hash table will keep growing as long as tshark is running. Furthermore, there should be other data structures as well in certain dissectors, which would increase the memory usage even more. At least that's my understanding of the dissection engine.

So, if he does not see any increase in memory usage after running tshark for 6 months (just my interpretation of his comments), my understanding of the dissection engine might be wrong.

Any idea why tshark does not crash with an out of memory error after running it continuously for 6 months? Is there anything in tshark that clears 'old data structures' if it is running in the way described above? I was not able to find such a thing in the code!?!

Thanks!

asked 22 Jun '14, 08:25 Kurt Knochner ♦ edited 22 Jun '14, 08:47
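The growth described in the question can be illustrated with a toy model. This is only a sketch of the idea, not Wireshark's actual conversation code: the names `ConversationTable` and `record_packet` are made up for illustration, and the key is assumed to be an address/port/protocol tuple.

```python
from typing import Dict, Tuple

Endpoint = Tuple[str, int]
Key = Tuple[Endpoint, Endpoint, str]


class ConversationTable:
    """Toy stand-in for a conversation hash table (hypothetical, not Wireshark code)."""

    def __init__(self) -> None:
        self.entries: Dict[Key, dict] = {}

    def record_packet(self, src: Endpoint, dst: Endpoint, proto: str) -> None:
        # Sort the endpoints so both directions map to the same conversation.
        a, b = sorted([src, dst])
        key = (a, b, proto)
        # An entry is created on first sight and never removed afterwards.
        state = self.entries.setdefault(key, {"packets": 0})
        state["packets"] += 1


# One long-lasting conversation: many packets, a single entry.
long_lived = ConversationTable()
for _ in range(10_000):
    long_lived.record_packet(("10.0.0.1", 5000), ("10.0.0.2", 6000), "udp")

# Many short conversations: every new source port adds an entry.
short_lived = ConversationTable()
for port in range(10_000):
    short_lived.record_packet(("10.0.0.1", 1024 + port), ("10.0.0.2", 80), "tcp")

print(len(long_lived.entries), len(short_lived.entries))
```

In this model the table size depends on the number of distinct conversations seen, not on the number of packets or the running time.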

3 Answers:

Either he's modified the source code directly (in a non-trivial way) or he's seeing very little traffic. Or, potentially, he's running it via a system like upstart or systemd which automatically restarts crashed processes, in which case it does restart every couple of days and he's just never noticed.

Tangentially: I had a hack at one point, just a couple of lines, which would wipe out all state after each packet, letting tshark run in "stateless" mode, but I've lost it and I don't know if it ever worked all that well in the first place.

answered 22 Jun '14, 09:18 eapache

Maybe the traffic he's capturing doesn't have the usual memory creation issues. He said in his post he was looking at the CAMEL protocol, which I guess means he's capturing M3UA/SCTP (right?)... and a quick peek at SCTP's dissector code doesn't show it creating conversations like UDP and TCP do. So if all he's capturing is traffic between two SS7 systems or something similar, maybe he just doesn't have the type of packet traffic that would have the issues.

answered 22 Jun '14, 10:23 Hadriel

That's what I thought as well, so I asked him if he pre-filtered the traffic, as that would explain it partly. I'm waiting for an answer. (22 Jun '14, 10:42) Kurt Knochner ♦

Hi all, here are my answers to Kurt's questions:

At the beginning, I saw tshark's memory usage increase. It reached 10% of 8 GB RAM in one hour, and since then it has stayed around 15% and I don't see any further increase.

YES, I'm pretty sure, because it is the core process of our service. Our engineers check the process and the log files three times every day; besides, if there is any crash while it is running, the warning system should send an SMS to all of us automatically. It has the same PID and is not able to restart by itself (the source code was changed to process messages, but it still runs in the same way as the normal tshark).

NO. In my program, I do run some filters, but that happens after decoding the messages. I ignore messages whose decoded parameter values do not match our objective.

And here are my answers to some of the comments above:

As you can see from my tshark command, the size of each log file is limited to ~650 Mb. This size is reached in about 12 minutes. Also, this is 1/3 of the traffic of a telco which has about 35 million subscribers, and tshark has to capture about a hundred calls every second. So I think it is not exactly little traffic :-)

YES, you are right. I run tshark on an IN network which has ONLY CAMEL, SCTP messages... No other type of packet. Maybe this is the answer for my case :-) (23 Jun '14, 00:12) hoangsonk49
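As a rough check of the traffic figures quoted in this comment, the capture rate can be worked out as follows. This assumes that "~650 Mb" means about 650 megabytes per file; the unit is an assumption, not something the post confirms.

```python
FILE_SIZE_MB = 650       # assumed to be megabytes; the post says "~650 Mb"
MINUTES_PER_FILE = 12    # time to fill one file, from the post

mb_per_second = FILE_SIZE_MB / (MINUTES_PER_FILE * 60)
mbit_per_second = mb_per_second * 8
files_per_day = 24 * 60 / MINUTES_PER_FILE
gb_per_day = FILE_SIZE_MB * files_per_day / 1000

print(f"{mb_per_second:.2f} MB/s, {mbit_per_second:.1f} Mbit/s")
print(f"{files_per_day:.0f} files/day, {gb_per_day:.0f} GB/day")
```

Under that assumption the capture writes roughly 0.9 MB/s, or about 78 GB per day, which supports the point that this is not a low-traffic link.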

Maybe that explains it, as @Hadriel said: The SCTP dissector does not create a conversation table entry, however it adds other data structures on its own. Hm... BTW: Are there many different IP addresses or just a few IP addresses? (23 Jun '14, 03:06) Kurt Knochner ♦

I see many different IP addresses (at least, more than 15 addresses). Some information: When a *.pcap is created, we dissect this file in real time, and when a file is completely decoded and processing switches to the next file, we are able to delete this file without any warning or error. So I think that once a pcap has been decoded, there is no connection between this file and other data; otherwise, it should not be possible to delete it. (23 Jun '14, 18:53) hoangsonk49

Hi Kurt, we are going to deploy a service which also captures and analyzes the messages in an IN network. It is similar to the previous service, but in this case the IN network contains only UDP (not CAMEL like the previous telco). According to my understanding, it will definitely face the memory problem because of the hashtable in

Thanks! (05 Aug '14, 23:46) hoangsonk49

That depends on the dissector that gets called on the UDP frame, so what is the protocol on top of UDP? (06 Aug '14, 01:32) Kurt Knochner ♦

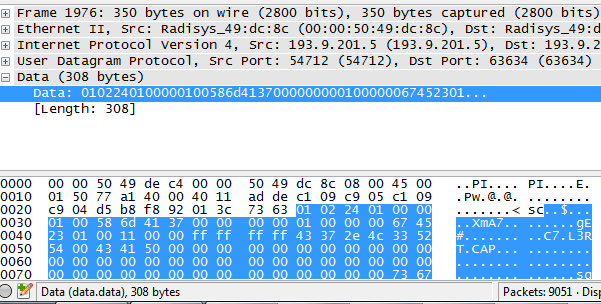

It is data.data. In this case, I'm afraid that changing the code of

(06 Aug '14, 04:01) hoangsonk49

Is that one long lasting conversation or many short conversations? (06 Aug '14, 06:01) Kurt Knochner ♦

That is one long lasting conversation. This is the data to be encoded. (06 Aug '14, 07:55) hoangsonk49

Then there will be only one entry in the hash table, or just a few if tshark runs for several days/weeks/months. That should be no problem at all. (06 Aug '14, 08:15) Kurt Knochner ♦

Maybe I have misunderstood. This is one of a thousand messages. Each message has one conversation like that. So at any time, we capture many data.data to analyze. My question is: can I clear the hashtable, or just remove the setup of the conversation from the source code? (06 Aug '14, 08:41) hoangsonk49

Well, yes you 'can', but I don't know if there would be any side effects, as I have not yet checked the code, and I'm not sure if I will find the time during the next couple of days. (06 Aug '14, 11:51) Kurt Knochner ♦

You might be interested by https://blog.wireshark.org/2014/07/to-infinity-and-beyond-capturing-forever-with-tshark/ (06 Aug '14, 13:18) Pascal Quantin
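What "clearing the hash table" could mean in principle is sketched below. This is a hypothetical illustration only: `ExpiringConversationTable` and its idle timeout are invented for this example and are not taken from the Wireshark source, and, as noted in the comments above, the side effects of dropping dissector state are not known.

```python
from typing import Dict, Hashable


class ExpiringConversationTable:
    """Hypothetical table that forgets conversations idle for too long."""

    def __init__(self, idle_timeout: float) -> None:
        self.idle_timeout = idle_timeout
        self.last_seen: Dict[Hashable, float] = {}

    def record_packet(self, key: Hashable, timestamp: float) -> None:
        # Create or refresh the entry for this conversation.
        self.last_seen[key] = timestamp

    def expire(self, now: float) -> int:
        # Drop every conversation not seen within the idle timeout.
        stale = [k for k, t in self.last_seen.items()
                 if now - t > self.idle_timeout]
        for k in stale:
            del self.last_seen[k]
        return len(stale)


table = ExpiringConversationTable(idle_timeout=60.0)
for i in range(1000):
    # One new short conversation per second, each from a different port.
    key = ("10.0.0.1", 1024 + i, "10.0.0.2", 4000, "udp")
    table.record_packet(key, timestamp=float(i))

removed = table.expire(now=1000.0)
print(removed, len(table.last_seen))
```

With periodic expiry the table size is bounded by the number of conversations active within the timeout window rather than by the total seen since start-up. Any packet that arrives for an expired conversation would be treated as the start of a new one, which is exactly the kind of side effect that would need checking per dissector.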

I've used Tshark numerous times, but never for a period of 6 months. But I have used the following on a WinTel machine for anywhere from 10 ~ 20 days without any errors.

tshark -i 3 -w MyCapture.pcap -s 80 -b filesize:1000000

I dump all my files in 1GB size. Then I use Editcap to weed out only the portions I want (usually within 5 ~ 30 minutes prior to and/or after the problem) to reduce the 1GB file to a more usable size. I've had 192GB of data over 7 days and 233GB of data over 20 days, but never experienced any such error.

Cheers,

answered 15 Apr '15, 06:17 Walter Benton

Your snaplength of 80 ( It's possible that they may not be able to add state to the hash tables or other data they maintain. (15 Apr '15, 06:50) grahamb ♦
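The capture-then-trim workflow from this answer can be written down as two command lines. The sketch below only builds the argument lists and does not run anything; the helper names, the input file name and the timestamps passed to `trim_command` are hypothetical placeholders. It relies on tshark's `-b filesize:` value being given in kB and on editcap's `-A`/`-B` start and stop time options.

```python
import shlex
from typing import List


def capture_command(interface: str, outfile: str,
                    snaplen: int, filesize_kb: int) -> List[str]:
    return [
        "tshark",
        "-i", interface,                  # capture interface (index or name)
        "-w", outfile,                    # base name for the output files
        "-s", str(snaplen),               # snapshot length: bytes kept per packet
        "-b", f"filesize:{filesize_kb}",  # switch to a new file after this many kB
    ]


def trim_command(infile: str, outfile: str, start: str, stop: str) -> List[str]:
    # editcap -A/-B keep only the packets between the start and stop time.
    return ["editcap", "-A", start, "-B", stop, infile, outfile]


capture = capture_command("3", "MyCapture.pcap", snaplen=80, filesize_kb=1_000_000)
trim = trim_command(
    "MyCapture_part.pcap",   # hypothetical name of one captured file
    "problem_window.pcap",   # hypothetical output name
    "2015-04-15 06:00:00",   # hypothetical start of the window of interest
    "2015-04-15 06:30:00",   # hypothetical end of the window of interest
)

print(shlex.join(capture))
print(shlex.join(trim))
```

The first printed line reproduces the command quoted in the answer; 1000000 kB corresponds to the roughly 1GB files mentioned there.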

hm.. that's actually a possible explanation, and that's why I asked him to confirm that it's the same process (same PID) running for 6 months. I'm waiting for an answer.

I dug up my hack code and cleaned it up a bit and submitted it as a Work-In-Progress for code review: https://code.wireshark.org/review/2559/