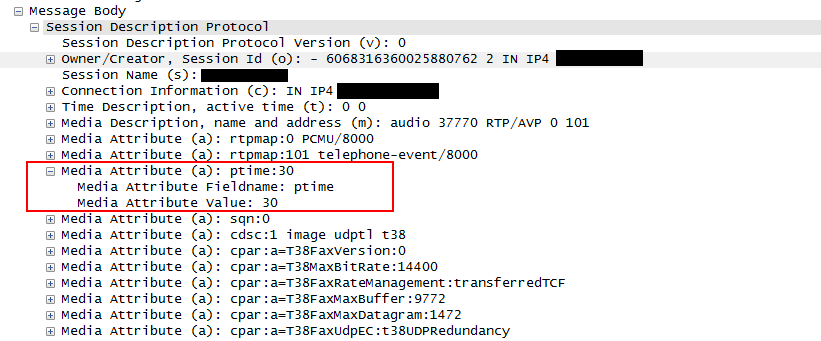

How do I create a filter to find the packetization interval for an RTP packet? I can figure it out manually by looking at the size of the RTP payload and the codec used. For example, a 160-byte G.711 payload has a packetization interval of 20 ms, and a 240-byte G.711 payload has a packetization interval of 30 ms. The packetization interval can also be found as a media attribute in the SDP body of the SIP INVITE, usually "a=ptime:30" or "a=ptime:20".
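The manual calculation from the question can be sketched in Python. This is a minimal illustration, not a Wireshark feature. It assumes a fixed-rate codec. G.711 (PCMU/PCMA) samples at 8000 Hz with 8 bits per sample, so each millisecond of audio is 8 payload bytes. The names `BYTES_PER_MS`, `ptime_from_payload` and `ptime_from_sdp` are hypothetical helpers. The SDP parser simply returns the first `a=ptime` line it finds. It does not distinguish session-level from media-level attributes.

```python
import re
from typing import Optional

# Hypothetical lookup table: payload bytes per millisecond of audio.
# G.711 samples at 8000 Hz with 8 bits per sample -> 8 bytes per ms.
# Only G.711 is listed; variable-rate codecs cannot be handled this way.
BYTES_PER_MS = {
    "PCMU": 8,  # G.711 mu-law, static RTP payload type 0
    "PCMA": 8,  # G.711 A-law, static RTP payload type 8
}


def ptime_from_payload(payload_len: int, codec: str) -> float:
    """Packetization interval in ms, derived from the RTP payload size."""
    return payload_len / BYTES_PER_MS[codec]


def ptime_from_sdp(sdp: str) -> Optional[int]:
    """Value of the first a=ptime attribute in an SDP body, or None if absent."""
    match = re.search(r"^a=ptime:(\d+)\s*$", sdp, re.MULTILINE)
    return int(match.group(1)) if match else None


if __name__ == "__main__":
    print(ptime_from_payload(160, "PCMU"))  # 20.0
    print(ptime_from_payload(240, "PCMA"))  # 30.0
    sdp = "v=0\r\nm=audio 49170 RTP/AVP 0\r\na=rtpmap:0 PCMU/8000\r\na=ptime:20\r\n"
    print(ptime_from_sdp(sdp))  # 20
```

The SDP value is optional. When `a=ptime` is absent, the helper returns `None`, and the payload-size method is the fallback.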

asked 09 Oct '14, 16:43 gsalomon

2 Answers:

I'm not sure exactly what you want to do. A filter doesn't "find" a value; it filters the packets shown based on the fields and values you tell it to match.

answered 10 Oct '14, 08:58 Hadriel

You can't. Well, sort of, but it depends. Packetization takes place in the RTP endpoint. That's where a timer runs to collect samples into an RTP packet and set its timestamp. But depending on the codec actually used, this may or may not lead to an RTP packet being transmitted, and it's only the transmitted RTP packets we can analyze.

So, assuming there's a constant packet stream (no VAD, etc.), you could pick out the timestamps of consecutive RTP packets and do the math. But that requires a calculation spanning multiple packets. OK, MATE may be helpful here. Another value is already calculated for you: the delta from the previously displayed packet (frame.time_delta_displayed). If you set up a display filter showing only the RTP packets you're interested in, you can use this field. Still, it's only an approximation. It's the delta between packet receptions, which is only somewhat correlated with packetization.

So the real answer is in the control protocol, as you have already stated.

answered 10 Oct '14, 08:59 Jaap ♦
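Jaap describes doing "the math" on the timestamps of consecutive RTP packets. That can be sketched outside Wireshark as follows. This is a hedged illustration with several assumptions:

* The input is a list of (sequence number, RTP timestamp) pairs from a single SSRC, in sequence order.
* The RTP clock rate is 8000 Hz, which is correct for G.711.
* The most common timestamp step is the packetization interval.

Because RTP timestamps are set by the sender, this avoids the network jitter that affects frame.time_delta_displayed. The pairs could be exported with something like `tshark -r call.pcap -Y rtp -T fields -e rtp.seq -e rtp.timestamp`, where `call.pcap` is a placeholder file name. The function name `estimate_ptime_ms` is hypothetical.

```python
from collections import Counter
from typing import Iterable, Optional, Tuple


def estimate_ptime_ms(
    packets: Iterable[Tuple[int, int]], clock_rate: int = 8000
) -> Optional[float]:
    """Estimate the packetization interval (ms) of one RTP stream.

    packets: (sequence_number, rtp_timestamp) pairs from a single SSRC,
    in sequence order. clock_rate: RTP clock in Hz (8000 for G.711).
    """
    deltas = Counter()
    prev = None
    for seq, ts in packets:
        if prev is not None:
            prev_seq, prev_ts = prev
            # Only compare packets adjacent in sequence-number space
            # (16-bit wraparound), so lost packets don't inflate the step.
            if (seq - prev_seq) % 2**16 == 1:
                # RTP timestamps are 32-bit and wrap around as well.
                deltas[(ts - prev_ts) % 2**32] += 1
        prev = (seq, ts)
    if not deltas:
        return None
    # Take the most common step; gaps from VAD/silence suppression show up
    # as rarer, larger steps and are outvoted.
    ticks, _ = deltas.most_common(1)[0]
    return ticks * 1000 / clock_rate


if __name__ == "__main__":
    # 160-tick steps with one silence gap between seq 102 and 103.
    stream = [(100, 0), (101, 160), (102, 320), (103, 2400), (104, 2560)]
    print(estimate_ptime_ms(stream))  # 20.0
```

Taking the most common step rather than the average is a design choice. It follows the caveat above: a stream with VAD is not constant, and a mean would be skewed by the silence gaps.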