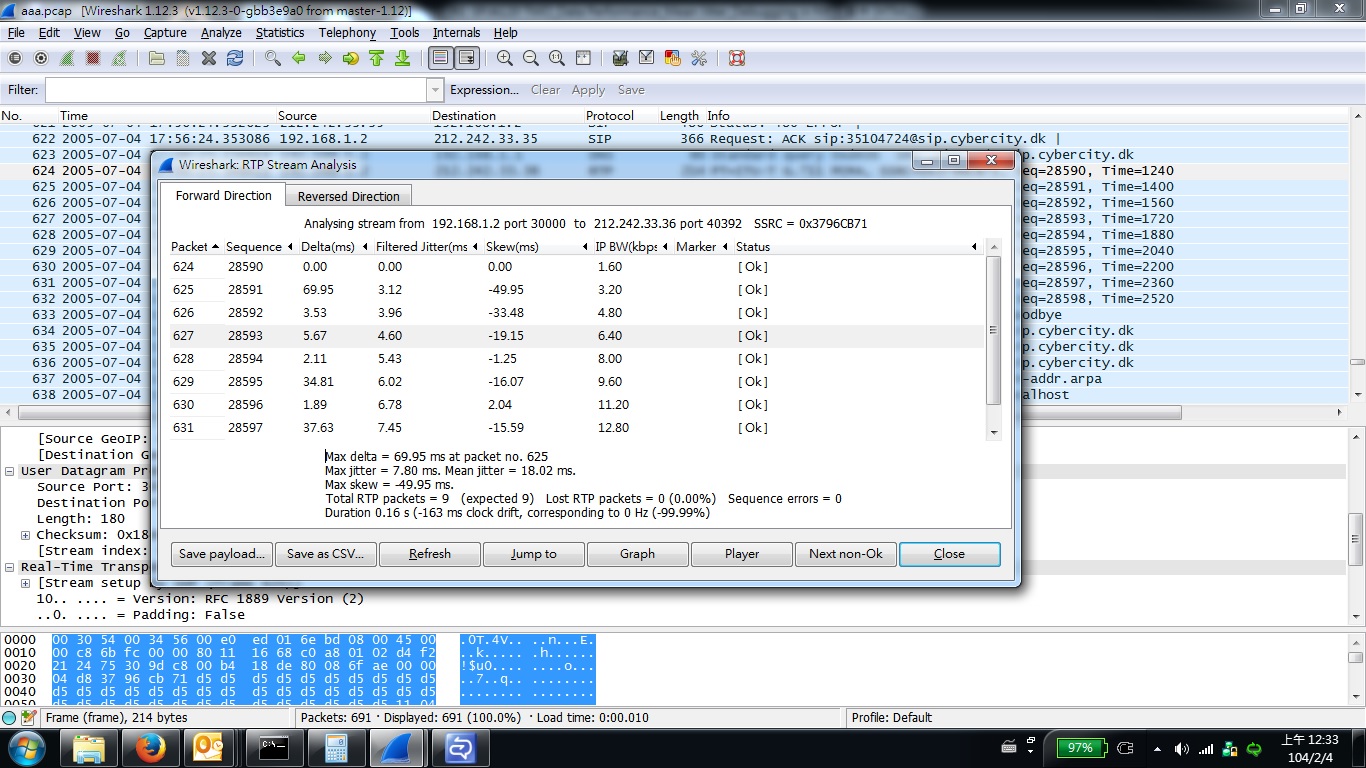

I am trying to learn how jitter and mean jitter are calculated. I downloaded an example pcap file named aaa.pcap from http://wiki.wireshark.org/SampleCaptures#head-6f6128a524888c86ee322aa7cbf0d7b7a8fdf353. This capture is the one used as the jitter example in the RTP_statistics section of wiki.wireshark. When I run RTP Stream Analysis, it shows information about every RTP packet, including its jitter. My question is: the Max jitter is 7.80 ms, so why is the Mean jitter 18.02 ms? Where does a Mean jitter of 18.02 ms come from?
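For reference, here is a minimal sketch of the interarrival jitter estimator defined in RFC 3550 (section 6.4.1), which RTP Stream Analysis is assumed here to follow: J(i) = J(i-1) + (|D(i-1,i)| - J(i-1)) / 16. The function names `rtp_jitter` and `mean_and_max` are hypothetical, the 8000 Hz clock rate is an assumption (typical for G.711 audio), RTP timestamp wraparound is ignored, and "mean jitter" is assumed to be the plain average of the per-packet jitter values.

```python
from typing import List, Tuple


def rtp_jitter(packets: List[Tuple[float, int]], clock_rate: int = 8000) -> List[float]:
    """Per-packet interarrival jitter in ms, using the RFC 3550 estimator.

    packets: (arrival_time_seconds, rtp_timestamp) pairs in arrival order.
    clock_rate: RTP timestamp units per second (assumed 8000 Hz here).
    Timestamp wraparound is not handled in this sketch.
    """
    jitter = 0.0      # running jitter estimate, in seconds
    result = [0.0]    # the first packet has no predecessor to compare with
    prev_arrival, prev_ts = packets[0]
    for arrival, ts in packets[1:]:
        # D(i-1, i): change in relative transit time, converted to seconds
        d = (arrival - prev_arrival) - (ts - prev_ts) / clock_rate
        # J(i) = J(i-1) + (|D(i-1, i)| - J(i-1)) / 16
        jitter += (abs(d) - jitter) / 16.0
        result.append(jitter * 1000.0)
        prev_arrival, prev_ts = arrival, ts
    return result


def mean_and_max(values: List[float]) -> Tuple[float, float]:
    """Arithmetic mean and maximum of the per-packet jitter values."""
    return sum(values) / len(values), max(values)


# 20 ms packets (160 timestamp units at 8000 Hz); the third packet arrives 5 ms late.
jitters = rtp_jitter([(0.000, 0), (0.020, 160), (0.045, 320), (0.060, 480)])
mean_j, max_j = mean_and_max(jitters)
print(jitters, mean_j, max_j)
```

Because the estimator only moves 1/16 of the way toward each new |D| value, a single 5 ms disturbance produces jitter values well under 1 ms. Under this averaging assumption, the mean can never exceed the maximum.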

asked 03 Feb '15, 09:14 by Antibes, edited 03 Feb '15, 09:21

One Answer:

To my understanding, the mean value should never be larger than the max value, so this looks like a bug to me. Please file a bug report at https://bugs.wireshark.org

Regards

answered 09 Feb '15, 15:32 by Kurt Knochner ♦
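The reasoning in the answer can be written out explicitly. Assuming the mean jitter is the arithmetic mean of the per-packet jitter values $J_1, \dots, J_n$ (an assumption about how the statistic is defined), each term is at most the maximum, so the mean is bounded by it:

$$
\bar{J} = \frac{1}{n}\sum_{i=1}^{n} J_i \;\le\; \frac{1}{n}\sum_{i=1}^{n} \max_{k} J_k = \max_{k} J_k
$$

Under that definition, a Mean jitter of 18.02 ms alongside a Max jitter of 7.80 ms cannot both be correct.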