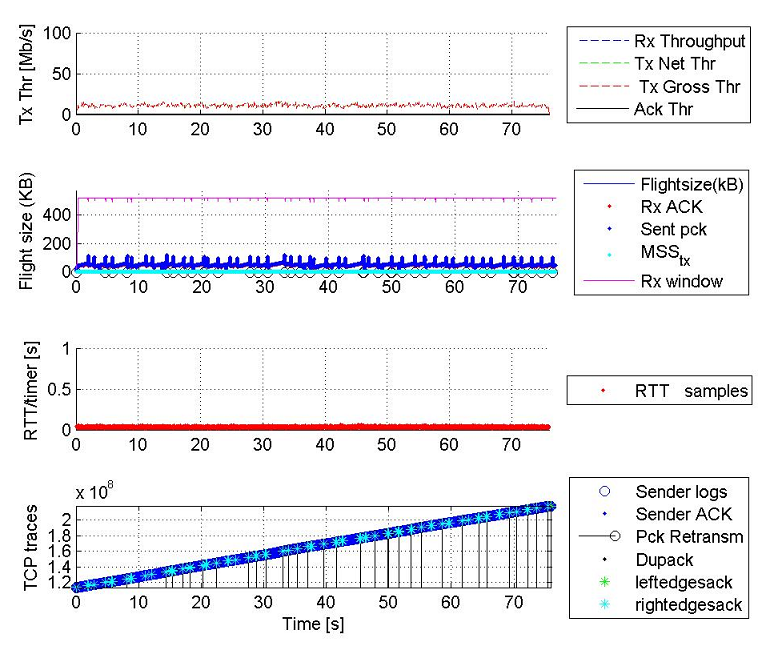

Hi all, I am currently troubleshooting end-to-end (e2e) issues on LTE and use Wireshark logs for the analysis. I am quite new to this, so any help would be welcome. My problem is limited DL throughput. RTT is OK, so there are no additional delays, but the flight size drops all the time. I use a MATLAB tool to generate some graphs for this analysis (see the figure for the situation). This is the receiver side. There seems to be some IP loss, but a deeper look at the logs in Wireshark suggests that IP loss is not the real cause of the low flight size that limits the throughput. There are a lot of Dup ACKs, which cause retransmissions, but even when there are no retransmissions the flight size still drops. Any ideas or experience to share about what the actual problem is? I could upload the log if you would be kind enough to have a look at it. BR, coMi

asked 21 Apr '15, 06:21 coMi edited 22 Apr '15, 05:29

One Answer:

I've done LTE throughput troubleshooting before, and usually this has been a buffer bloat problem. It happens when high-speed links are switched or routed onto lower-speed links, so the device in between has to buffer packets (and starts dropping them at some point). An indicator I have often seen is packet loss as soon as about 100 kbyte is in flight, because this seems to be a common buffer size for network devices (per port, I guess). The buffer fills up to its maximum and then has to drop packets because more and more data keeps coming in. It can get as bad as 1/10th of the theoretical maximum throughput, caused by the delay until the retransmission has a chance to get through the clogged device. The cause is that the receiving device advertises a TCP window size that is too large, so the sender pushes out tons of data, which overloads the infrastructure (some LTE device drivers seem to use hard-coded 4 MB TCP window sizes, which is absolutely deadly in heterogeneous networks).

answered 21 Apr '15, 07:34 Jasper ♦♦ edited 21 Apr '15, 07:37
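To put rough numbers on the buffer argument in this answer, here is a minimal Python sketch. It assumes a steady state in which the sender is limited only by the advertised receive window (not by its congestion window), so everything beyond the bandwidth-delay product of the path queues up at the bottleneck. The function names and the example link rate and RTT are hypothetical; only the 4 MB window and the roughly 100 kbyte buffer come from the answer.

```python
def bottleneck_queue_bytes(window_bytes, bottleneck_bps, rtt_s):
    """Estimate how many in-flight bytes sit in the bottleneck queue.

    The path itself can hold one bandwidth-delay product (BDP) of data;
    anything the sender keeps in flight beyond that has to be buffered.
    """
    bdp_bytes = bottleneck_bps / 8 * rtt_s
    return max(0.0, window_bytes - bdp_bytes)


def buffer_overflows(window_bytes, bottleneck_bps, rtt_s, buffer_bytes):
    """True if a full window in flight would exceed the device buffer."""
    queued = bottleneck_queue_bytes(window_bytes, bottleneck_bps, rtt_s)
    return queued > buffer_bytes


if __name__ == "__main__":
    # Hypothetical path: 50 Mbit/s bottleneck, 40 ms base RTT.
    rate_bps = 50e6
    rtt_s = 0.040
    buffer_bytes = 100_000          # "100 kbyte" buffer from the answer
    for window in (300_000, 4 * 1024 * 1024):
        queued = bottleneck_queue_bytes(window, rate_bps, rtt_s)
        print(window, round(queued), buffer_overflows(window, rate_bps, rtt_s, buffer_bytes))
```

With these assumed numbers a window slightly above the BDP fits into the buffer, while a 4 MB window asks the device to queue far more than 100 kbyte, which is the situation that leads to drops.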

First of all, thanks Jasper, I appreciate your help. The first part of the answer makes sense. The bottleneck is probably somewhere in the transport part of the network, so there are some limiting links that don't let the flight size grow. One more question: by filtering, I found that duplicate ACKs with high counter numbers (over 70, so packet loss for sure) are actually tied to specific source port numbers, or this is something ... Does this tell us that the ports are actually the bottleneck, and how can they be found in the network? I am a radio engineer, so I am quite new to transport troubleshooting.

Thanks, coMi

I can't really tell from the information you have given, but what I've seen many times is UL latency, caused by delays in getting UL grants from the scheduler in the eNodeB. If you can get a MAC/RLC/PDCP log (at either end of the link), or just the IP traffic at or beyond the eNodeB, you could check the TCP ACKs being sent in the uplink. If you see big bunches of them received close together in time, then check the TCP timestamps in the options (if present), and I expect you'll see they were more spread out in time. Obviously the closer to the eNodeB, the surer you can be that they originated over the air interface.
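The check described above (ACKs arriving in bunches although their TCP timestamps show they were sent spread out) could be sketched roughly as follows in Python. This is only an illustration under assumptions: the input is a list of `(arrival_time_s, tsval)` pairs for the pure ACKs of one connection, for example exported from the capture via the Wireshark fields `frame.time_epoch` and `tcp.options.timestamp.tsval`. The function name, the 1 ms TSval tick and the 0.5 ratio threshold are hypothetical choices; the real tick length depends on the sender's TCP stack.

```python
def find_compressed_acks(acks, tick_s=0.001, ratio=0.5):
    """Return indices of ACKs that arrived much closer together than they were sent.

    acks   : list of (arrival_time_s, tsval) in capture order
    tick_s : assumed duration of one TSval tick in seconds (stack dependent)
    ratio  : flag an ACK if its arrival gap is below ratio * send gap
    """
    flagged = []
    for i in range(1, len(acks)):
        prev_time, prev_tsval = acks[i - 1]
        cur_time, cur_tsval = acks[i]
        arrival_gap = cur_time - prev_time
        # TSval is a 32-bit counter, so take the difference modulo 2**32.
        send_gap = ((cur_tsval - prev_tsval) % 2**32) * tick_s
        if send_gap > 0 and arrival_gap < ratio * send_gap:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    # Made-up sample: ACKs sent every 10 ticks, the last three arrive as a bunch.
    sample = [
        (0.0000, 1000),
        (0.0101, 1010),
        (0.0203, 1020),
        (0.0501, 1030),
        (0.0502, 1040),
        (0.0503, 1050),
    ]
    print(find_compressed_acks(sample))
```

Long runs of flagged ACKs would fit the picture of uplink packets being held back until a grant arrives and then sent in one burst, but the threshold needs tuning against the actual capture.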

Duplicate ACKs simply tell you that there is a gap (meaning packet loss), and the higher the dup ACK count, the longer the gap existed. A high count means that a lot of packets arrived before the retransmission finally made it through. Most LANs have dup ACK counts below a dozen, but with buffer bloat the numbers usually go much higher. The highest I have seen, on an LTE test setup suffering from massive buffer bloat, was a dup ACK count over 1000.
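As a rough illustration of how such dup ACK counts come about, here is a simplified Python sketch that counts runs of repeated ACK numbers in one direction of a connection. It assumes the input is a list of `(ack_number, payload_len)` pairs in capture order and treats any zero-payload segment repeating the previous ACK number as a duplicate. Wireshark's own analysis applies stricter conditions, so the counts may not match its dup ACK numbering exactly; the function name is hypothetical.

```python
def dup_ack_runs(segments):
    """Return (ack_number, dup_count) for every run of duplicate ACKs.

    segments : list of (ack_number, payload_len) from the receiver, in capture order
    A segment counts as a duplicate if it carries no payload and repeats
    the ACK number of the segment before it.
    """
    runs = []
    last_ack = None
    count = 0
    for ack, payload_len in segments:
        if payload_len == 0 and ack == last_ack:
            count += 1
        else:
            if count:
                runs.append((last_ack, count))
            last_ack = ack
            count = 0
    if count:
        runs.append((last_ack, count))
    return runs


if __name__ == "__main__":
    # Made-up sample: a gap at 2000 is acknowledged again three times.
    sample = [(1000, 0), (2000, 0), (2000, 0), (2000, 0), (2000, 0),
              (5000, 0), (6000, 0), (6000, 0)]
    for ack, dups in dup_ack_runs(sample):
        print(ack, dups)
```

Each duplicate corresponds to a segment that reached the receiver behind the gap, so the longest run gives a feel for how much data was delivered before the retransmission closed it.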

Finding ports that are overloaded is usually done by looking at the switch console. The admins should be able to tell which port is in trouble; it's not possible to do this from packets because you often do not know which path they take through the network.