I am answering your question about tuning Wireshark to avoid missed packets in a separate answer, so that it does not interfere with the interpretation of the packets in the other answer thread.

"Can there be another reason?" "Maybe the tracing tool could not keep up with the high packet rate tracing unfiltered packets." I have filters to capture all packets, I have tuned the network card buffers on my OS (Linux), and I have a RAID 10. Is there any other tuning for Wireshark?

There is no one-size-fits-all answer to tuning your capture setup. In general, you need to follow the path from the source to the disk on which the packets are saved. Any resource in between can be a bottleneck, so check all the resources involved:

- The mirror port. When running a monitor session to a mirror port, make sure the (combined) traffic of the sources does not overload the monitor port. Spanning both RX and TX of a 1 Gbit/s interface can create 2 Gbit/s of traffic if it is fully utilized in both directions. Many switches report discards in the interface statistics, so you can clear the counters before starting your capture and then read the discards after finishing it. Experiment in a test setup first to get to know your switch statistics in different scenarios.

- The capturing NIC and CPU. Does your capturing NIC have enough buffer space for spikes in traffic? If the CPU is overloaded and cannot respond to the IRQs of the NIC, the NIC must drop packets. So monitor your CPU usage and the counters of your NIC (if available under the OS being used).

- The disks. When libpcap/winpcap receives the frames, it needs to write them to disk. If your disk is not fast enough, packets will be dropped. If there are packet bursts, increasing the buffer size in dumpcap/tshark/wireshark might help, but if there is a constant flow of traffic that the disks can't keep up with, then increasing the buffer size will not matter. You will need to filter more or slice packets (if you don't care about higher layer protocol data). Or you need to stripe disks (as you are already doing, but why RAID 10? Why not use RAID 0 with 4 disks so you have quadruple write speed while capturing?). The dropped packets counter in pcapng formatted files indicates the number of packets libpcap/winpcap was not able to save to disk.
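The reasoning in the list above can be put into rough numbers. The following Python sketch is only a back-of-the-envelope aid: the function names and the example figures (a 100 MB capture buffer, a disk writing 200 MB/s) are assumptions made for illustration, not measurements or recommended settings.

```python
def mirror_load_bps(rx_bps: float, tx_bps: float) -> float:
    """Traffic (bits/s) a mirror port must carry when RX and TX are both spanned."""
    return rx_bps + tx_bps


def is_oversubscribed(rx_bps: float, tx_bps: float, monitor_port_bps: float) -> bool:
    """True when the spanned traffic exceeds what the monitor port can send."""
    return mirror_load_bps(rx_bps, tx_bps) > monitor_port_bps


def seconds_until_buffer_full(buffer_bytes: float,
                              incoming_bps: float,
                              disk_write_bytes_per_s: float) -> float:
    """How long a capture buffer can absorb traffic the disk cannot keep up with.

    incoming_bps is in bits per second, disk_write_bytes_per_s in bytes per second.
    Returns infinity when the disk keeps up with the incoming rate.
    """
    surplus_bytes_per_s = incoming_bps / 8 - disk_write_bytes_per_s
    if surplus_bytes_per_s <= 0:
        return float("inf")
    return buffer_bytes / surplus_bytes_per_s


if __name__ == "__main__":
    # A fully utilized 1 Gbit/s link spanned in both directions.
    load = mirror_load_bps(1e9, 1e9)
    print("mirror load (bit/s):", load)
    print("1 Gbit/s monitor port overloaded:", is_oversubscribed(1e9, 1e9, 1e9))
    # Hypothetical 100 MB buffer and a disk that sustains 200 MB/s.
    print("buffer lasts (s):", seconds_until_buffer_full(100e6, load, 200e6))
```

With a sustained surplus the buffer only delays the first drop, which is why a larger buffer helps with bursts but not with a constant overload.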

answered 27 Apr '15, 01:32

SYN-bit ♦♦

Thank you for your time, mrEEde.

1) I think the original interface did not lose the packet, and that the mirror port, for some reason (for example performance), did not send the packet. Can there be another reason?

2) I mention this because the switch is having problems, but I need to show evidence from the capture. Are there filters that allow me to do that? This is very complicated for me.

3) My other question: if the ACK number equals the sequence number plus the packet length in bytes, why are there duplicated sequence numbers? I am confused about how TCP works.

"1) I think the original interface did not lose the packet, and that the mirror port, for some reason (for example performance), did not send the packet."

The receiver ACKs the missing packet, so it was never discarded anywhere.

"Can there be another reason?" Maybe the tracing tool could not keep up with the high packet rate tracing unfiltered packets.

"2) I mention this because the switch is having problems, but I need to show evidence from the capture. Are there filters that allow me to do that?"

Real retransmissions can be found using the display filter tcp.analysis.retransmission
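If you want to collect that evidence outside the GUI, the same display filter can be passed to tshark. The sketch below is one possible way to do it from Python; the file name capture.pcapng and the choice of output fields are assumptions for illustration, and tshark must be installed and on the PATH for the second function to work.

```python
import subprocess


def build_tshark_command(capture_file: str) -> list:
    """Build a tshark command line that lists retransmitted segments."""
    return [
        "tshark",
        "-r", capture_file,                    # read from a saved capture
        "-Y", "tcp.analysis.retransmission",   # display filter
        "-T", "fields",                        # print selected fields only
        "-e", "frame.number",
        "-e", "ip.src",
        "-e", "ip.dst",
        "-e", "tcp.seq",
    ]


def list_retransmissions(capture_file: str) -> list:
    """Run tshark and return one output line per retransmission it reports."""
    result = subprocess.run(
        build_tshark_command(capture_file),
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


if __name__ == "__main__":
    # "capture.pcapng" is a hypothetical file name.
    for line in list_retransmissions("capture.pcapng"):
        print(line)
```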

"3) My other question: if the ACK number equals the sequence number plus the packet length in bytes, why are there duplicated sequence numbers?"

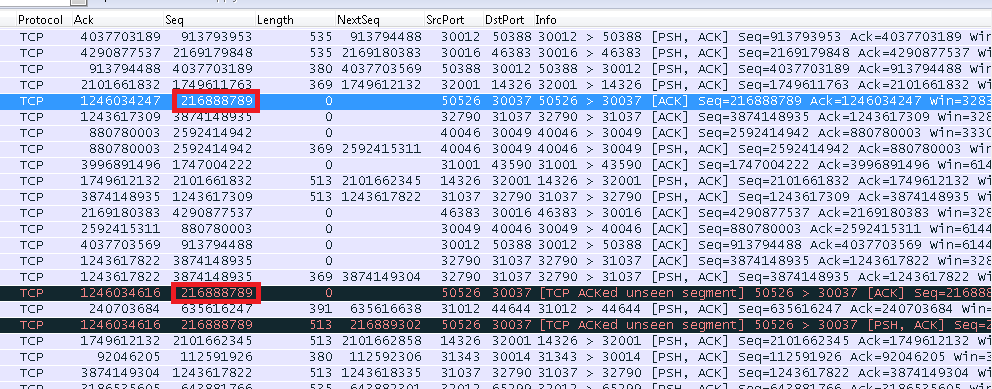

Only the last segment with sequence number 216888789 is carrying payload. The first two have tcp.len==0, so they are just ACKing incoming packets and do not advance the sequence number.
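A small sketch of the arithmetic may help here. It assumes the simplified rule that only payload bytes advance the sequence number (SYN and FIN flags, which also consume one sequence number each, are ignored). The sequence number 216888789 is from the screenshot; the payload length of 100 bytes for the last segment is a made-up value.

```python
SEQ_SPACE = 2 ** 32  # TCP sequence numbers wrap around at 32 bits


def sequence_numbers(initial_seq: int, payload_lengths: list) -> tuple:
    """Return the sequence number of each sent segment and the next unused one.

    A segment with payload length 0 (a pure ACK) leaves the sequence number
    unchanged, so several consecutive segments can show the same value.
    """
    seqs = []
    seq = initial_seq
    for length in payload_lengths:
        seqs.append(seq)
        seq = (seq + length) % SEQ_SPACE
    return seqs, seq


if __name__ == "__main__":
    # Two pure ACKs followed by one segment with a hypothetical 100-byte payload.
    seqs, next_seq = sequence_numbers(216888789, [0, 0, 100])
    print(seqs)      # all three segments carry the same sequence number
    print(next_seq)  # the ACK number the peer is expected to send back
```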

Thank you, mrEEde.

1) About "The receiver ACKs the missing packet, so it was never discarded anywhere": is it possible that the switch dropped this packet on the mirror port? My company says the switch shows dropped packets in its counters, but I need to be sure whether the cause is my Wireshark setup or the port mirroring.

2) "Can there be another reason?" "Maybe the tracing tool could not keep up with the high packet rate tracing unfiltered packets." I have filters to capture all packets, I have tuned the network card buffers on my OS (Linux), and I have a RAID 10. Is there any other tuning for Wireshark?

3) What about the last "tcp acked unseen segment"? I can't understand that case.

Thanks again for your time. This case is very complicated for me because I need to understand everything in order to report to my company.

"My company says the switch shows dropped packets in its counters"

Well, if the switch is known to drop packets, chances are that it also drops packets on the mirror port, which would explain why they don't show up in the trace and cause the 'tcp acked unseen segment' messages.

So the first goal would be to fix the 'increasing dropped packets counters'.

I don't understand the reason for the last "tcp acked unseen segment" in the image, because the preceding packet is there. This is my last question.

It has the same cause as the previous "tcp acked unseen segment" (as mrEEde told you): the first packet was an ACK only, and this one contains data. The packet between these two packets belongs to another session.

OK. The first packet I marked (blue) is fine. The second packet (black) is flagged for the missing segment with tcp.seq==1246034247 and tcp.len==369. For the third packet (black) I don't see the problem, because the second packet (black) is only the ACK for this packet.

Thanks a lot

OK. Of course there is no separate reason. The second black packet has the same ACK number as the first black packet, which is correct, because the ACK number says which byte the sender of the ACK expects to receive next. Because the two packets have the same ACK number, Wireshark marks both packets for the same reason. In other words, both packets point to the same missing segment.
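To illustrate why both packets get the same mark, here is a deliberately simplified sketch of such a check. It is not Wireshark's actual implementation: it ignores sequence number wrap-around, SACK and SYN/FIN handling. The missing segment (tcp.seq==1246034247, tcp.len==369) and the 513-byte payload are taken from this thread; the endpoint names, the earlier 100-byte segment and the sequence number 5000 are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    src: str
    dst: str
    seq: int
    ack: int
    length: int


def find_acked_unseen(segments: list) -> list:
    """Return indexes of segments whose ACK number covers data never captured."""
    highest_seen = {}  # (src, dst) -> highest seq + length captured in that direction
    flagged = []
    for index, seg in enumerate(segments):
        reverse = (seg.dst, seg.src)
        # The ACK refers to data sent in the reverse direction.
        if reverse in highest_seen and seg.ack > highest_seen[reverse]:
            flagged.append(index)
        forward = (seg.src, seg.dst)
        end = seg.seq + seg.length
        highest_seen[forward] = max(highest_seen.get(forward, end), end)
    return flagged


# Segment 1246034247..1246034615 (369 bytes) from "server" is missing in the capture.
trace = [
    Segment("server", "client", seq=1246034147, ack=5000, length=100),
    Segment("client", "server", seq=5000, ack=1246034616, length=0),    # pure ACK
    Segment("client", "server", seq=5000, ack=1246034616, length=513),  # same ACK, with data
]

if __name__ == "__main__":
    print(find_acked_unseen(trace))  # indexes of the two packets with the same ACK number
```

Both client packets acknowledge up to 1246034616 (1246034247 + 369), while the capture only contains data up to 1246034247, so each of them points at the same gap.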

"The third packet (black) i don't see the problem because the second packet (black) have the ACK only for this packet" The third packet carries 513 bytes of payload in the reverse direction so it's the first time in this trace snippet that port 50526 is actually sending data.