Hi, can you please help me understand why there is an extra ACK (with len=0) from the server (CentOS 6.6, Linux 2.6.32) during TCP socket communication? Is it possible to avoid the extra ACK and thus the unnecessary overhead? Our scenario is communication with small packets (GSM data). We have looked at TCP delayed acknowledgement, Nagle's algorithm, etc., but have not found the right solution. Thanks, Saty (asked 23 Mar '16, 07:06 by saty)

One Answer:

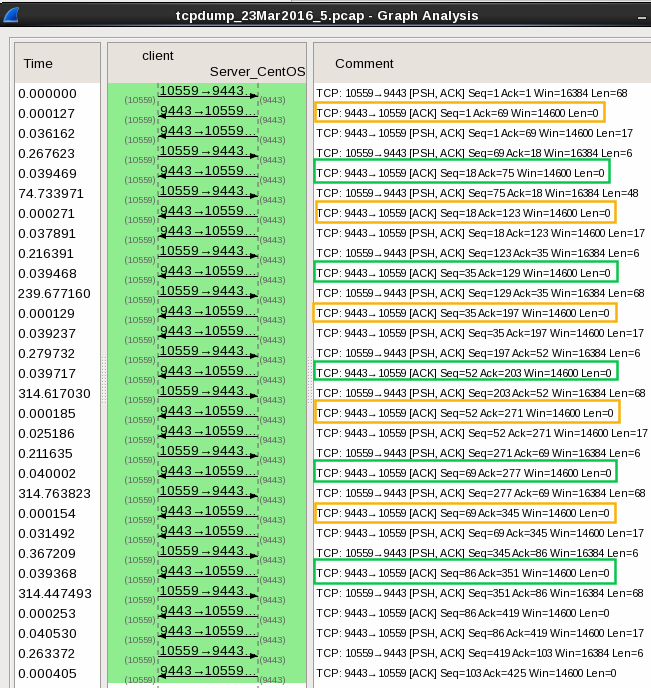

I think the problem is that your server sends immediate ACKs at times when it could simply have waited a few ms for data to flow in the reverse direction (highlighted in yellow).

Regards, Matthias (answered 24 Mar '16, 22:55 by mrEEde)

Yes, how can we avoid this immediate ACK from the server? What changes will help in this case? Is it a server/OS-level change or a socket-program-level change? Thank you. (saty, 25 Mar '16, 01:28)

Here is the output of /proc/net/tcp: (saty, 25 Mar '16, 02:29)

14: 0100007F:1F45 00000000:0000 0A 00000000:00000000 00:00000000 00000000 0 0 783653097 1 ffff880037a80780 99 0 0 10 -1 (saty, 25 Mar '16, 02:30)

Just out of curiosity, why don't you want this ACK? From my point of view it isn't so bad. (Christian_R, 25 Mar '16, 03:11)

By avoiding these immediate ACKs, if possible, we want to reduce unnecessary overhead on GSM data usage and save cost and bandwidth. This is a test case with one client; imagine many thousands of clients, each producing many such ACKs, and how much could be saved. (saty, 25 Mar '16, 03:32)

Which parts of the software can you modify or configure: your application, the TCP stack behaviour, or neither? On both the client and the server, or nowhere at all? What I can see is that the client requests something, and the server ACKs the request before it has assembled the response. This is what @mrEEde drew your attention to: if it normally takes tens of milliseconds to produce the response, the server application could delay the ACK for the received request until the response data are ready, so that the ACK for the request travels in the same packet as the response. Currently, it looks as if the server tells the TCP stack not to wait. Another thing is that the client sends some data (6 bytes) back to the server as soon as it receives the server's response. After receiving these data, the server again sends an immediate ACK.
The question is whether your application protocol needs to send those 6 bytes at all, i.e. whether these data are meaningful or just some kind of application-level ACK, which would be redundant (useless) when you use a transport protocol with guaranteed delivery such as TCP. If you are not able to change the behaviour of the application software, I'm afraid you have no chance to get rid of those ACK-only (…) (sindy, 25 Mar '16, 04:02)

This trace lasted 21 minutes, and only 2,146 bytes were transferred (counting the Ethernet, IP, and TCP headers, not just the data). Adjusting for the fact that the capture was taken on the server, and that some of the frames actually transmitted on the network would have been larger because of the minimum Ethernet frame size, 2,338 bytes would have been transferred. If all the zero-length ACKs could be eliminated, 768 bytes would be saved, or 0.61 bytes per second. While that is one-third of the total, I'm not sure I'd spend much time trying to change what is essentially normal TCP behavior in order to save 0.61 bytes per second per device. The only reason it's such a high fraction of the total is that so little data is being sent: the largest packet in this trace carried only 68 bytes of data, and only 526 data bytes were transferred in the whole 21 minutes. A packet carries roughly the same amount of overhead whether it contains no data, 100 bytes of data, or 1,460 bytes of data. (Jim Aragon, 25 Mar '16, 14:19)

I think so, too. It is better to optimize the application than always the TCP/IP implementation. From my point of view, the way the server stack behaves here is a really smart implementation for a lot of use cases. (Christian_R, 25 Mar '16, 14:30)

We are currently using CentOS 6.6 (Linux 2.6.32) on the server. Is there a way to reduce the ACK overhead by tuning the TCP stack at the server level?
Some parameters we are looking at, such as "tcp_delayack" and quickack, are not available in the sysctl file on this OS version. We have also looked at some socket-program-level parameters such as tcp_cork, which are not available either. (saty, 28 Mar '16, 04:50)

A common way people handle this is to use UDP as the layer-4 protocol and implement the needed transport control mechanisms themselves. I suggest you think about that solution, because you can save a lot of bits by using UDP instead of TCP. Otherwise, I recommend asking about that parameter in a Linux or CentOS forum. (Christian_R, 28 Mar '16, 05:20)

I have raised this question in the linuxquestions.org forum now: http://www.linuxquestions.org/questions/showthread.php?p=5522417#post5522417 (saty, 28 Mar '16, 05:52)

Yes, UDP is a good way to avoid ACKs and their overhead. However, since this is already implemented on TCP, we are looking for an OS-level or socket-level way to avoid the immediate ACKs; if that is possible, life would be much easier. (saty, 28 Mar '16, 05:56)

Can anyone suggest how to avoid the immediate ACKs from the server? (saty, 29 Mar '16, 03:41)
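The /proc/net/tcp row posted in the thread can be decoded column by column. The following is a minimal sketch, assuming the row was produced on a little-endian x86 host (where the IPv4 address bytes appear reversed) and decoding only the leading columns: local and remote endpoint, state, uid, and inode. The function names are illustrative and not part of any library. Decoded, the row is a socket in LISTEN state bound to 127.0.0.1:8005, so it is not the server-to-client connection seen in the capture; the row of that established connection would be the more informative one.

```python
from __future__ import annotations

import socket
import struct

# Connection states as numbered in the Linux kernel (include/net/tcp_states.h).
TCP_STATES = {
    0x01: "ESTABLISHED", 0x02: "SYN_SENT", 0x03: "SYN_RECV",
    0x04: "FIN_WAIT1", 0x05: "FIN_WAIT2", 0x06: "TIME_WAIT",
    0x07: "CLOSE", 0x08: "CLOSE_WAIT", 0x09: "LAST_ACK",
    0x0A: "LISTEN", 0x0B: "CLOSING",
}


def decode_endpoint(field: str) -> tuple[str, int]:
    """Decode an 'AAAAAAAA:PPPP' IPv4 endpoint from /proc/net/tcp.

    Assumes a little-endian host: the network-order address was printed
    as a native 32-bit integer, so its bytes are packed back in reverse.
    The port is printed in host order and needs no swapping.
    """
    addr_hex, port_hex = field.split(":")
    addr = socket.inet_ntoa(struct.pack("<I", int(addr_hex, 16)))
    return addr, int(port_hex, 16)


def parse_proc_net_tcp_line(line: str) -> dict:
    """Extract the leading, well-known columns of one /proc/net/tcp row."""
    f = line.split()
    return {
        "local": decode_endpoint(f[1]),
        "remote": decode_endpoint(f[2]),
        "state": TCP_STATES.get(int(f[3], 16), f[3]),
        "uid": int(f[7]),
        "inode": int(f[9]),
    }


row = ("14: 0100007F:1F45 00000000:0000 0A 00000000:00000000 00:00000000 "
       "00000000 0 0 783653097 1 ffff880037a80780 99 0 0 10 -1")
print(parse_proc_net_tcp_line(row))
```

For the posted row this prints `{'local': ('127.0.0.1', 8005), 'remote': ('0.0.0.0', 0), 'state': 'LISTEN', 'uid': 0, 'inode': 783653097}`. The trailing columns (timers, reference count, socket pointer, and further per-connection TCP state) are deliberately left undecoded here.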
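Both options mentioned in the thread do exist on Linux, but as per-socket options set with setsockopt(), not as sysctls, which would explain why they do not show up in the sysctl file. According to the tcp(7) man page, TCP_QUICKACK (since Linux 2.4.4) switches quick-ACK mode on or off, though not permanently, and TCP_CORK (since Linux 2.2) holds back partial frames until it is cleared. The sketch below shows where a server in the spirit of sindy's suggestion might call them: after reading a request it asks the kernel to leave quick-ACK mode, so the ACK may ride on the reply, and it corks while writing the reply in two pieces. It is Linux-only; the 2-byte length-prefix framing and the function names are hypothetical. It proves nothing about what appears on the wire: whether the ACK is really piggybacked (which also requires the reply to be ready before the delayed-ACK timer fires) has to be checked in a capture.

```python
import socket
import threading

# Linux values from <netinet/tcp.h>; CPython exposes both constants on Linux.
TCP_QUICKACK = getattr(socket, "TCP_QUICKACK", 12)
TCP_CORK = getattr(socket, "TCP_CORK", 3)


def handle_client(conn: socket.socket) -> None:
    """Read one request and send one reply (hypothetical 2-byte length framing)."""
    with conn:
        request = conn.recv(1024)
        # Leave quick-ACK mode so the ACK for `request` may be delayed and
        # carried by the reply. Not sticky: the kernel may re-enter
        # quick-ACK mode on its own, so a real server re-applies it per read.
        conn.setsockopt(socket.IPPROTO_TCP, TCP_QUICKACK, 0)
        body = request.upper()  # stand-in for real request processing
        # Cork so the kernel holds the length prefix until the body is
        # queued, letting both leave together instead of as two small segments.
        conn.setsockopt(socket.IPPROTO_TCP, TCP_CORK, 1)
        conn.sendall(len(body).to_bytes(2, "big"))
        conn.sendall(body)
        conn.setsockopt(socket.IPPROTO_TCP, TCP_CORK, 0)  # uncork: flush now


def run_demo(payload: bytes) -> bytes:
    """Loopback round trip through handle_client; returns the raw reply bytes."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # any free port
        server.listen(1)
        port = server.getsockname()[1]

        def accept_one() -> None:
            conn, _ = server.accept()
            handle_client(conn)

        worker = threading.Thread(target=accept_one)
        worker.start()
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(payload)
            chunks = []
            while True:
                data = client.recv(1024)
                if not data:  # server closed the connection after replying
                    break
                chunks.append(data)
        worker.join()
    return b"".join(chunks)


if __name__ == "__main__":
    print(run_demo(b"hello!"))
```

For example, `run_demo(b"hello!")` returns `b"\x00\x06HELLO!"`. Note that TCP_CORK only reduces the number of data segments the server sends; it does not by itself remove the ACK-only packets discussed above.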

You'll need to provide some evidence, ideally a capture file, to illustrate your issue. You haven't told us when this "spurious" ACK occurs within the TCP conversation.

How can I upload a capture file?

You cannot (and no one else can either). Publish it on CloudShark, Google Drive, OneDrive, or anywhere else that doesn't require a login, and edit your question with a link to it.

I have uploaded the capture file at https://www.cloudshark.org/captures/3319789a4eb5 (192.168.xx.xx is the server and 62.140.xx.xx is the client).

How can we avoid the ACKs with len=0, to reduce the overhead on GSM data usage?
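Jim Aragon's figures from the comment thread can be reproduced and, under an explicit assumption, scaled to the "many thousands of clients" case raised in the thread. This is a minimal sketch: the three constants come from his comment, while the assumption that the 21-minute trace is representative of round-the-clock traffic and the fleet size of 1,000 devices are hypothetical. Also note that the Ethernet framing included in these byte counts does not travel over the GSM link, so the volume actually billed would differ somewhat.

```python
# Figures quoted from Jim Aragon's analysis of the posted capture.
TRACE_SECONDS = 21 * 60   # the trace lasted 21 minutes
ON_WIRE_BYTES = 2338      # total bytes, adjusted for minimum Ethernet frame size
ACK_ONLY_BYTES = 768      # bytes taken up by the zero-length ACKs

saved_per_second = ACK_ONLY_BYTES / TRACE_SECONDS
fraction_of_total = ACK_ONLY_BYTES / ON_WIRE_BYTES

# Hypothetical extrapolation: assumes the trace is representative of a
# device's traffic around the clock for a 30-day month.
SECONDS_PER_MONTH = 30 * 24 * 3600
saved_per_device_month = saved_per_second * SECONDS_PER_MONTH

DEVICES = 1000            # hypothetical fleet size
saved_fleet_month = saved_per_device_month * DEVICES

print(f"{saved_per_second:.2f} B/s per device, {fraction_of_total:.0%} of the traffic")
print(f"{saved_per_device_month / 1e6:.2f} MB per device per month")
print(f"{saved_fleet_month / 1e9:.2f} GB per month for {DEVICES} devices")
```

With these inputs it prints 0.61 B/s and 33%, matching the comment, then about 1.58 MB per device per month and about 1.58 GB per month for the hypothetical 1,000 devices. Whether that volume justifies the effort depends on the tariff, which the thread does not state.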