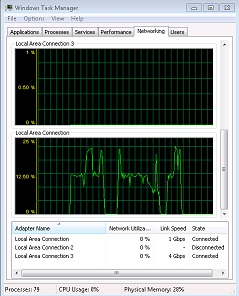

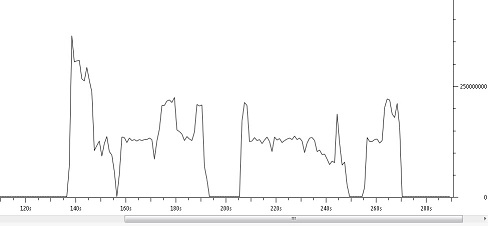

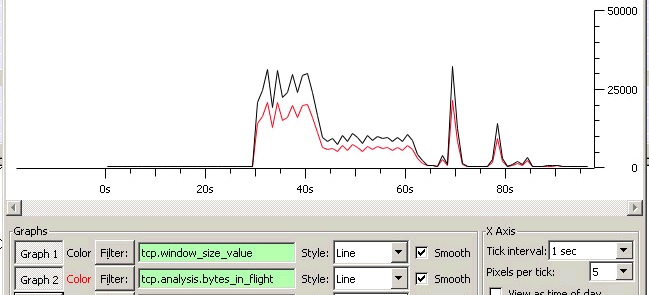

I am troubleshooting a slow file transfer over SMB from a Windows share to a VM running FreeBSD 10.3. FreeBSD is at 192.168.20.225, Windows is at 192.168.20.100.

Is there anything in the 3-way handshake between the hosts that indicates a problem? (Window Size Value?) https://www.cloudshark.org/captures/2ade37ce25dd

Here is a graph of a 2GB file transfer from a Windows computer hosting the file:

Here is a graph of what Wireshark sees:

Thank you

asked 13 Jul '16, 13:39 net_tech, edited 13 Jul '16, 13:53

2 Answers:

The SMB trace suggests that the client (FreeBSD VM) is slow. Yes, it would be nice to see it with segmentation offload disabled, but I'm not sure it matters in this case. The big packets are coming from the Windows machine and the traffic was captured on the Windows machine. The 7-second delay isn't from the big packets; it's from the next read request from the client.

The FTP trace is similar. There's a large burst of traffic at the beginning (peaking at 50,000,000 bytes/sec), but it then quickly slows down to (and stabilizes at) around 4,000,000 bytes/sec. I don't see much in the way of transport problems (e.g., packet loss). I don't know if the segmentation offload is hiding this; I've never worked much with TSO enabled.

These two traces suggest to me that the (FreeBSD VM) client is slow, and I would further theorize that it may be the filesystem that is slow. You might want to time some (large) file creations on the VM to test the theory. For example:

Will create a 500 Mbyte file and tell you how long it took. Do some math and find out what the filesystem write speed is. If you get something on the order of 4 Mbytes/sec (like the FTP transfer) then that's your problem. If it's much faster then my theory's no good.

Note: the above would work on Linux; I think it will work on FreeBSD too.

answered 18 Jul '16, 11:25 JeffMorriss ♦

Output:

(20 Jul '16, 08:15) net_tech

So the disk is certainly fast enough… Looking again at the FTP capture I can see two things of interest:

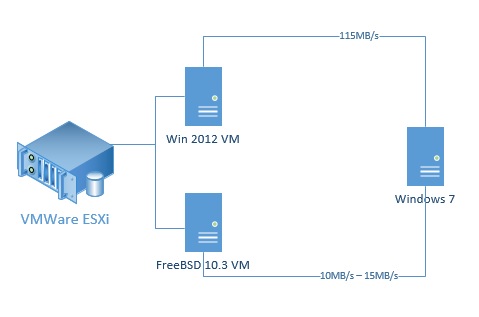

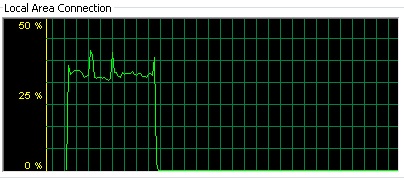

Is this (Windows) server able to send files (of this size) to other clients at higher speeds?

(20 Jul '16, 14:17) JeffMorriss ♦

Yes, Windows 7 (acting as a server) is capable of sending at 115 MB/s.

Here is a diagram of the current setup. The VMware server is hosting 2 VMs: Windows Server 2012 and FreeBSD. The Windows 7 workstation on the right is a physical computer with a network share. Transferring a test 2GB file from the Win 7 PC to the 2012 Server VM runs at almost wire speed; transferring the same 2GB file from the Win 7 PC to the FreeBSD VM is extremely slow. Both VMs (FreeBSD and Win 2012) have identical hardware assigned to them; there are no special memory/CPU/hard drive reservations.

(13 Sep '16, 07:01) net_tech

I spun up another Win 7 VM and enabled promiscuous mode on the vSwitch so that my new Win 7 VM running Wireshark could see all traffic between the FreeBSD VM and the Windows 7 PC. The Windows 7 PC hosting the SMB share is now at 192.168.20.103; FreeBSD is at 192.168.20.225. The new capture can be downloaded here (1.2GB).

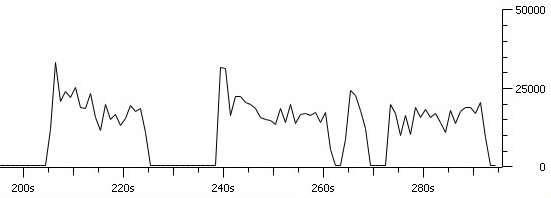

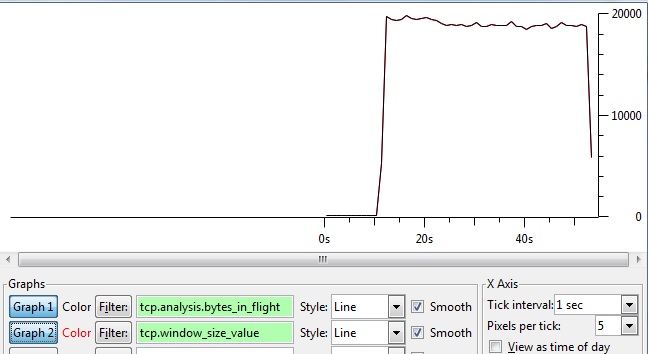

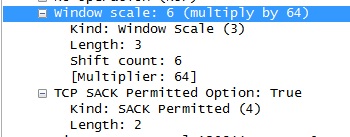

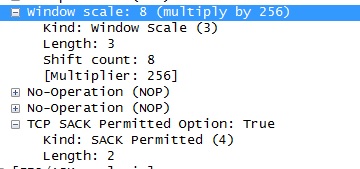

(13 Sep '16, 18:58) net_tech

There is a 7-second delay around frame 368749. If it does turn out to be a file system (ZFS) problem, is there anything in the trace to support this theory? Thanks

(14 Sep '16, 07:42) net_tech

Well, frame 368749 is an SMB Read Request (from FreeBSD) which is 14 seconds after the last message in that (TCP) conversation. That 14-second delay certainly appears to be due to something on the (FreeBSD) client, but whether that’s the filesystem or something else is another question…

(14 Sep '16, 13:44) JeffMorriss ♦

Looking at the throughput in the latest file, your original supposition may have been correct: it appears that the Windows server is filling the client’s TCP window. The client is not setting window scaling, which is limiting Windows to 62k before it has to stop and wait. (This is visible if you graph

Can you enable window scaling on FreeBSD?

(14 Sep '16, 14:41) JeffMorriss ♦

According to https://wiki.freebsd.org/SystemTuning RFC1323 support is enabled by default, but I went ahead and applied the suggested settings from this post: https://slaptijack.com/system-administration/freebsd-tcp-performance-tuning. Unfortunately there was no improvement. I also experimented with another FreeBSD VM using UFS. Here is the graph.

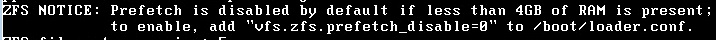

(15 Sep '16, 13:54) net_tech

Finally, I bumped the RAM of the FreeBSD VM from 4GB to 8GB. This is the first time I was able to transfer a 2GB file at half of gigabit speed with minimal drops.

(15 Sep '16, 13:56) net_tech

Interesting… Is FreeBSD now advertising large TCP windows (via window scaling)? At what point did it start doing that (which change did it)? The “throw memory at it” fix makes me wonder about some of the notes on the FreeBSD pages about needing to increase network memory buffers when enabling TCP window scaling… If that’s the case, there may be some other config options to try.

(15 Sep '16, 14:07) JeffMorriss ♦

No, the largest Window size value is still 1040. I want to do another test on a physical FreeBSD system. I am not using NFS, but I suspect virtualization could be adding some complexity to this puzzle: https://calvin.me/slow-vmware-nfs-zfs-add-zil/

(15 Sep '16, 19:15) net_tech

1040? In the “2gb” file I was looking at, FreeBSD was advertising 64k (in both the SYN and in all the TCP ACKs).

(16 Sep '16, 10:49) JeffMorriss ♦

Sorry, it’s still 64k, but this could be the answer to why the performance is better with 8GB:

(16 Sep '16, 11:45) net_tech

Hmm, isn’t prefetching for optimizing (sequential) reads? Here the BSD client is reading from a remote server and writing locally.

(16 Sep '16, 12:50) JeffMorriss ♦

You are correct, prefetching would help if I was reading from the BSD client. I thought the better result was from the memory increase; apparently it’s from UFS. To rule out virtualization, I built another BSD client on a physical machine with an SSD drive and ZFS. Results are much better, with no drops in the file transfer, which leaves VMware as part of the problem.

(19 Sep '16, 18:05) net_tech

Do you think increasing the TCP window on a BSD system will improve the throughput? Executing sysctl net.inet.tcp on a BSD system shows that rfc1323 is enabled by default.

FreeBSD 10.3 VM TCP Window

Win 2012 VM TCP Window

Thank you

(19 Sep '16, 18:09) net_tech

I had a look at the capture named "2gb". It is missing a lot of packets because the sniffer dropped them, not because the network dropped them. These gaps occur at very regular intervals, not randomly. In the time period I delved into, for every 35-37ms of data captured there was then 70-74ms of data missing. This leads me to believe that the capturing mechanism has an inherent flaw, perhaps related to the virtualisation.

Nevertheless, we can find some useful information. Although we don't see the 3-way handshake for the main SMB connection, other SYN/SYN-ACK setups between the same client and server don't support Window Scaling. Therefore, we can only ever have 64KB in flight (i.e., per round trip). Put another way, we can only do one SMB request/response at a time (because each SMB request asks for a 64 KB data block).

This may not matter in this case because the "slowness" is entirely due to the client. We are achieving a throughput of ~500 Mb/s because we are achieving only approximately 64 KB/ms. This is because the client is only making one request per 1 or 2 ms. Each request is serviced very quickly and the 64 KB is delivered from the server across the network very quickly. The client usually ACKs the server data quickly, except for the last few packets, which are ACKed by the client's next SMB READ&X request packet.

The time gaps between the client's request packet and the last server packet are consistently just under 0.4ms. The time from the last server packet to the client's next request packet is consistently just under 0.6ms. The time between client request packets is consistently 1ms +/- 0.2ms. The network RTT seems to be around 0.2ms, so the time gaps between client requests are not related to the network. They are time spent by the client dealing with the received data before bothering to request the next block. The large 14 second time gap is, likewise, due to the client.

So your hunt is to determine why the client waits so long between requests.
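To put rough numbers on the figures above, here is a minimal Python sketch. It assumes a 64 KB (65,536-byte) block per request, plus the roughly 1 ms request spacing and 0.2 ms RTT quoted above; the function name is made up for illustration. It compares the rate the client's request pacing allows with the ceiling that a 64 KB window would impose over that RTT.

```python
def throughput_mbps(bytes_per_cycle: int, cycle_us: float) -> float:
    """Throughput in Mb/s when bytes_per_cycle bytes move once every cycle_us microseconds."""
    # Bits per microsecond is numerically equal to megabits per second.
    return bytes_per_cycle * 8 / cycle_us


BLOCK_BYTES = 64 * 1024   # one SMB read / unscaled TCP window (assumed 65,536 bytes)
REQUEST_GAP_US = 1000     # ~1 ms between client requests, as read from the trace
RTT_US = 200              # ~0.2 ms network round trip, as read from the trace

client_paced = throughput_mbps(BLOCK_BYTES, REQUEST_GAP_US)
window_ceiling = throughput_mbps(BLOCK_BYTES, RTT_US)

print(f"client-paced rate:  {client_paced:.0f} Mb/s")
print(f"window/RTT ceiling: {window_ceiling:.0f} Mb/s")
```

With these inputs the window-imposed ceiling comes out above gigabit line rate, while the client-paced figure lands near the ~500 Mb/s observed. That is consistent with the client's request spacing, not the 64 KB window, being the limit on this low-latency LAN.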

I'd also be interested in why Window Scaling doesn't appear to be enabled. The client doesn't request it in its SYN packets. It would be interesting to know if enabling it would make a difference. Happy hunting!

answered 21 Feb '17, 02:04 Philst

Just seeing the 3-way handshake's not going to help. The Wireshark graph shows the throughput bouncing all over the place and even dropping to 0 for some seconds. We'd need to see the whole capture to analyze it.

Alternatively, I'd suggest looking at (and using some of the analysis techniques described in) Ronnie's excellent "analyze CIFS Traffic" presentation, available on the Presentations page of the wiki.

Here is a capture of the transfer via FTP between the same hosts, which looks even worse than SMB (500MB file)

https://www.dropbox.com/s/9a3exw8u6htijii/ftp.pcapng?dl=0

A tiny portion of the SMB trace (Windows -> FreeBSD): https://www.cloudshark.org/captures/bf42fc4b294b

Is Frame #7 an acknowledgment for Frame #6? There is a 7-second delay between the packets, which illustrates the drop in the graph above.

The trace was made on the host itself with "segmentation offload = yes". If you want to see what is really going on, you have to capture outside the host, as close as possible to the machine. Best recommendation: use a tap.