I have a TCP communication problem between two of my servers. Every ten seconds, a TCP connection is established and then reset. The session includes Dup ACKs and Retransmissions, but I couldn't locate the root cause since I don't observe any dropped packets either. You can find the traces from both sides here and here. Please note that there are some intermediate devices between these servers, although I'm not sure whether you need traces from them as well. If so, please let me know and I will update the post. asked 05 Aug '16, 01:44 by mert_z, edited 05 Aug '16, 01:45

2 Answers:

Your first trace only looks bad because of duplicate packets. Check this blog post for details: https://blog.packet-foo.com/2015/03/tcp-analysis-and-the-five-tuple/. After deduplicating the SRV1 capture, both captures look okay: a connection is established, some data is exchanged, the connection stays open in case more data is requested (resulting in "Keep Alives" when it isn't), and finally the connection is torn down via a reset when it turns out nothing else is needed. Looks pretty normal to me. answered 05 Aug '16, 01:53 by Jasper ♦♦, edited 05 Aug '16, 01:57

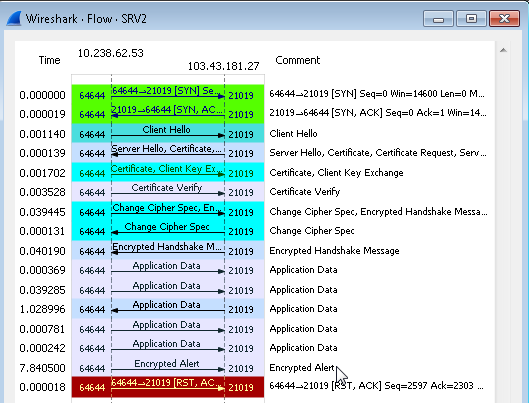

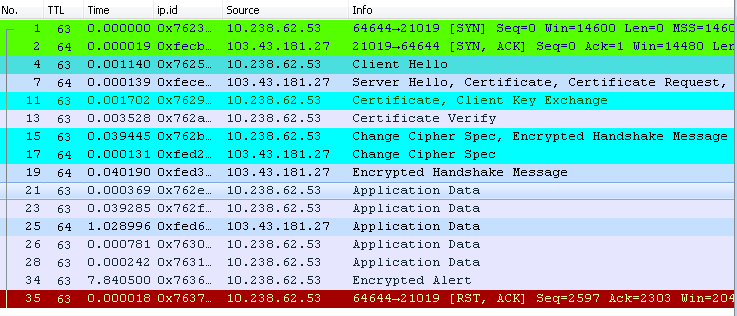

The TCP session is using TLS, so you need to "Decode As" SSL. The ip.id fields of the Encrypted Alert and the RST packet are incremental, which suggests they were generated by the client's IP stack and not by an external device. So it is the client's application logic that decided to terminate the session. answered 05 Aug '16, 03:26 by mrEEde
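The ip.id reasoning can be sketched as a small check: if the RST's IP ID continues the sequence the client was already using in the session, the client's own stack most likely generated it. This is a minimal Python sketch, not a Wireshark feature; the helper name `rst_follows_ip_id_sequence`, the `max_gap` tolerance, and the assumption that you copy the values out of the `ip.id` column are all hypothetical. The heuristic only holds for stacks that use an incrementing IP ID; some stacks randomize it.

```python
from typing import Sequence


def rst_follows_ip_id_sequence(prior_ids: Sequence[int], rst_id: int, max_gap: int = 16) -> bool:
    """Return True if rst_id plausibly continues the host's IP ID sequence.

    prior_ids: ip.id values of packets the same host sent earlier in the
    session, in capture order (hypothetical input copied from Wireshark).
    max_gap: assumed tolerance for packets the host sent elsewhere in between.
    """
    if not prior_ids:
        return False
    last = prior_ids[-1]
    # IP ID is a 16-bit field, so measure the forward distance modulo 2**16
    gap = (rst_id - last) % 65536
    return 0 < gap <= max_gap
```

A result of `False` for an RST that claims to come from the client would point toward a device in the path forging it; `True` supports mrEEde's conclusion.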

Sorry, posted the wrong link. Fixed now.

In fact, the TCP session should stay up as long as the application on the server side is up. However, before the RST is sent, the application encounters an end-of-stream error while trying to read data from the socket. This makes me believe that the connection is already broken before the application sends the RST. Is it possible that the retransmissions and duplicate ACKs are caused by a high RTT? Or did some intermediate device already break the TCP session?

No, there are no retransmissions or duplicate ACKs. These are "ghosts", not real packets. If you deduplicate your trace using

```
editcap -d srv1.pcap srv1_dedup.pcap
```

you'll see that there are no real problems. Does the client or server application set a socket timeout when opening the socket? It usually has to, and I'm guessing that is why the connection is torn down after a while (by the client side with IP 10.238.62.53).
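For readers wondering what `editcap -d` does: as we understand the editcap man page, it compares each packet's length and MD5 hash against the previous four packets and skips the packet on a match (`-D` sets a different window). The Python sketch below applies the same idea to a list of raw frames. It illustrates the technique only; it is not editcap's code, and `dedup_frames` is a hypothetical name.

```python
import hashlib
from collections import deque
from typing import Iterable, List


def dedup_frames(frames: Iterable[bytes], window: int = 4) -> List[bytes]:
    """Drop frames whose (length, MD5) matches one of the previous `window` frames."""
    recent = deque(maxlen=window)  # (length, digest) of the most recent frames seen
    kept = []
    for frame in frames:
        key = (len(frame), hashlib.md5(frame).digest())
        if key not in recent:
            kept.append(frame)
        # Every frame, kept or skipped, enters the window
        recent.append(key)
    return kept
```

Because the comparison only looks back a few frames, a genuine retransmission that arrives much later is kept, while the back-to-back copies produced by capturing the same packet twice are removed.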

And honestly, your RTT is lightning fast: less than 230 microseconds at the handshake. Your problem is on the application/server side, not the network connection.
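The handshake RTT can be read from any capture point by splitting it into two legs: SYN to SYN/ACK covers the path toward the server and back, and SYN/ACK to ACK covers the path toward the client and back. Their sum is the end-to-end RTT. This is a minimal sketch; the function name and the example timestamps are hypothetical and are not taken from the posted traces.

```python
from typing import Tuple


def handshake_rtt_us(t_syn: float, t_synack: float, t_ack: float) -> Tuple[float, float, float]:
    """Return (server_leg, client_leg, total) in microseconds.

    Inputs are capture timestamps in seconds for the SYN, SYN/ACK and ACK
    of the same three-way handshake.
    """
    if not t_syn <= t_synack <= t_ack:
        raise ValueError("timestamps must be in handshake order")
    server_leg = (t_synack - t_syn) * 1e6  # capture point -> server -> capture point
    client_leg = (t_ack - t_synack) * 1e6  # capture point -> client -> capture point
    return server_leg, client_leg, server_leg + client_leg
```

For example, `handshake_rtt_us(0.0, 0.000150, 0.000220)` gives roughly 150 µs, 70 µs and 220 µs. At that scale, retransmission timers would not be triggered by latency.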

Thank you very much for your comments. The reason I think the problem is on the network side is that the same app works successfully on another network. That's also why I don't think the RST is the result of a timeout. One interesting thing in this scenario is that the RST is seen every 9 seconds sharp. The app has a recovery mechanism: once the TCP connection is dropped, it establishes a new one very quickly, but that one also ends with a RST after 9 seconds. Please note that this is not seen on the other site, where TCP connections survive. So I'm still inclined to blame some intermediate device. Any ideas about this suspicious 9-second duration?
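One way to confirm that "9 seconds sharp" really is a fixed timer is to pair each connection's SYN time with its RST time and check how tightly the lifetimes cluster. This is a minimal sketch; the helper names, the 0.1 s tolerance, and the sample timestamps are assumptions for illustration, not values from the traces.

```python
from statistics import pstdev
from typing import List, Sequence


def session_lifetimes(syn_times: Sequence[float], rst_times: Sequence[float]) -> List[float]:
    """Seconds from each connection's SYN to its RST (lists must be paired per connection)."""
    if len(syn_times) != len(rst_times):
        raise ValueError("need one RST time per SYN time")
    return [rst - syn for syn, rst in zip(syn_times, rst_times)]


def looks_like_fixed_timer(lifetimes: Sequence[float], tolerance: float = 0.1) -> bool:
    """True if there are at least two lifetimes and their spread is within `tolerance` seconds."""
    return len(lifetimes) >= 2 and pstdev(lifetimes) <= tolerance
```

A near-zero spread points to a configured timeout somewhere (application socket or a device's state table) rather than to packet loss, which would produce irregular lifetimes.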

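A consistent RST at 9 seconds looks like a timeout value set by a human for the corresponding socket. When an application aborts a connection, for example by closing the socket while unread data is still queued or with SO_LINGER set to a zero timeout, the TCP stack sends a reset instead of a FIN. I'd expect 10 seconds, though, but it can really be any value.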
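The following Python sketch shows one common way an application-level read timeout turns into an RST on the wire: the socket gets a timeout, and when it expires the application closes with SO_LINGER on and a zero linger time. It is an assumption about what such an application might do, not a claim about the asker's software; `abortive_close` and `demo` are hypothetical names, and the `"ii"` linger layout matches Linux and macOS (Windows uses a different struct).

```python
import socket
import struct


def abortive_close(sock: socket.socket) -> None:
    """Close with SO_LINGER on and a zero linger time so the stack sends RST instead of FIN."""
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_LINGER, struct.pack("ii", 1, 0))
    sock.close()


def demo(read_timeout: float = 0.5) -> str:
    """Connect over loopback, let the client's read time out, and report what the server sees."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))
    listener.listen(1)
    port = listener.getsockname()[1]

    client = socket.create_connection(("127.0.0.1", port))
    server_side, _ = listener.accept()
    client.settimeout(read_timeout)  # hypothetical application read timeout
    try:
        client.recv(1024)  # the server never sends anything, so this times out
    except socket.timeout:
        abortive_close(client)  # the application gives up on the idle session
    else:
        client.close()

    try:
        data = server_side.recv(1024)
        result = "eof" if data == b"" else "data"
    except ConnectionResetError:
        result = "reset"  # the peer's RST surfaces as ECONNRESET
    finally:
        server_side.close()
        listener.close()
    return result
```

On Linux, `demo(0.2)` returns `"reset"`. A plain `close()` without the linger option would instead send a FIN, and the server would read an end of stream.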

Regarding an intermediate device: there seems to be only one routing hop between the two, so if you can find that device (probably a router or firewall), check whether it has a state timeout killing the session.

So you should try to capture on both sides simultaneously to check whether the reset always comes from the opposite side; this would indicate an intermediate device tearing down the connection in both directions.
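The comparison of two simultaneous captures can be written down as a small decision rule: if a host's own capture shows it sending the RST, that host originated it; if each capture only shows an RST arriving "from" the other side, neither endpoint sent one, and a device in the path likely injected both. This is a minimal sketch; `RstObservation` and `classify_rst` are hypothetical names, and the server address used in the usage note is a placeholder (only 10.238.62.53 appears in the thread).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RstObservation:
    capture_host: str  # IP address of the host the capture was taken on
    rst_src: str       # source IP of the RST as seen in that capture


def classify_rst(a: RstObservation, b: RstObservation) -> str:
    """Decide where a reset came from, given one observation per endpoint capture."""
    a_sent = a.rst_src == a.capture_host
    b_sent = b.rst_src == b.capture_host
    if a_sent and not b_sent:
        return f"sent by {a.capture_host}"
    if b_sent and not a_sent:
        return f"sent by {b.capture_host}"
    if not a_sent and not b_sent:
        # Each side saw an RST "from" the other, but neither capture shows one leaving
        return "likely injected by an intermediate device"
    return "both endpoints sent a reset"
```

For example, if the capture on 10.238.62.53 shows it sending the RST and the capture on the (placeholder) server 10.0.0.2 shows the same RST arriving, the result is `"sent by 10.238.62.53"`.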

I also located a capture from an intermediate firewall that the packets of this problematic session pass through. I suspect this firewall too; however, I couldn't see anything suspicious in its ingress and egress captures. Not sure if it helps, but these are the files: INGRESS_FW and EGRESS_FW.

I think @mrEEde already made a good point by working out that the teardown really comes from the client side.