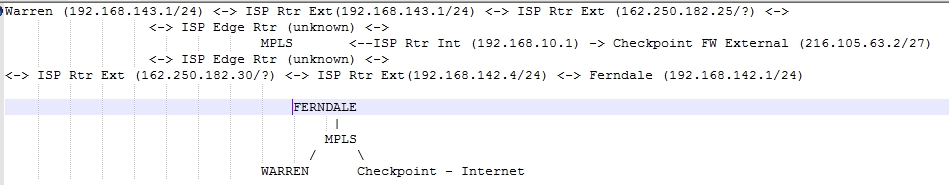

Sorry if this is a bit long. I have been losing sleep over it for 5 weeks now... I left on vacation for 2 days and over the weekend there was a power outage. To my knowledge, none of the servers, routers, etc. went down, thanks to the UPS.

We have two sites, Ferndale (main, 192.168.142.x) and Warren (192.168.143.x), connected by an MPLS link running at 20 Meg. The firewall is housed at the ISP's location with an internal address of 192.168.10.2. The Ferndale site hosts the main Windows servers along with an iSeries (AS/400) running our ERP and the main Mitel phone controller with the PRI. The Warren location has another phone controller that links to the one in Ferndale through the MPLS using VoIP. So Warren is basically a satellite whose users use 5250 emulation (telnet) to talk to the iSeries.

Starting the Monday I returned, users began to be kicked out of their telnet sessions and the phone controllers would drop communication with each other, causing anyone on a call to lose it. Sometimes this happens a few times in several hours, other times very frequently, causing major problems with our shipping in Warren.

I started with various traces and ping tools and noticed packet loss. I contacted the ISP; they ran their ping test with 100 packets and said all was good on their end. We had replaced the switches a month earlier, so I began pulling them. 6 weeks later, the problem is no better. I ripped out all of the switches and put the old ones back, replaced the cables, and tested everything I could think of.

During testing (plugged straight into their router), I noticed I could only get 50% upload speed in Ferndale from FTP and couldn't run any of the speed test sites at either location. Ferndale and Warren do NOT touch the firewall when speaking to each other; the firewall is only for internet access. I called the ISP, and they sent a tech who plugged into the router using their own network IP, going out their own internet gateway.
They could run speedtest.net and it came back fine, so again, not their issue... it's our firewall. The problem with that is: 1) Any XP/2003 Server machine could run speedtest.net (yes, we tried many other speed sites without success). 2) OCCASIONALLY speedtest.net and megapath would complete on the other machines, but it was rare. It ALWAYS failed during the second half of the upload. 3) I configured my machine (Win10) to turn off TCP auto-tuning and I can now run it most of the time. 4) The firewall shows NO rejections for any of the machines running the tests. A firewall wouldn't do this if it was the cause.

I have been running Wireshark and the captures are full of Dup ACKs and retransmits. I have even sent them to the ISP. In https://www.dropbox.com/sh/8ujc1k86dektf2q/AAC1uir8CFKdixVOK88iKnysa?dl=0 you can find 3 packet captures, all taken at the same time. One is for Ferndale, another for Warren, and the third is for the firewall. I am concerned with 3 machines: 192.168.142.7 (the AS/400), and 192.168.142.210 and 192.168.143.216, which are the phone controllers talking to each other. If you look at the firewall capture (line 2116) and the Ferndale capture (line 46304), this is my machine .75 doing a speedtest. It doesn't appear the packets are all making it through the MPLS. The captures were done by mirroring the ISP router ports and the entry into the firewall (Checkpoint).

Can SOMEONE tell me what is going on? I have run out of equipment to replace and there have been no changes to the phones or iSeries in months....

asked 11 Aug '16, 10:50 jwiener edited 11 Aug '16, 11:25


If I get you right, the MPLS connection is provided by the ISP, so you cannot measure on the outer side of the MPLS routers. If so, instead of using speedtest.net to generate traffic, I would use iperf during the calm part of the day (if any) so that little or no real traffic would interfere, running the two roles (traffic generator/traffic receiver) at different sites and then swapping them. This way you would be able to identify whether just one of the directions is affected or both, and you would be sure the firewall is not involved (well, unless the routing between 192.168.143.0/24 and 192.168.142.0/24 is performed on the same hardware as the firewall functionality, but the capture from the firewall suggests otherwise).

If everything is all right with the MPLS interconnection, you should see a sustained rate close to 20 Mbit/s for minutes in either direction (minus the real traffic, of course). If you see less, this is your proof to show to the ISP.
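To make the comparison against the contracted rate repeatable, the test could be scripted. The following is only a sketch under assumptions: it uses iperf3 (the post above just says "iperf"), it expects `iperf3 -s` to be already running at the other site, and the helper names `run_iperf3`, `received_bps` and `shortfall_percent` are made up for illustration.

```python
import json
import subprocess

# 20 Mbit/s is the MPLS rate stated in the question.
CONTRACTED_BPS = 20_000_000


def run_iperf3(server: str, seconds: int = 60, reverse: bool = False) -> dict:
    """Run an iperf3 client against `server` and return its JSON report.

    Assumes iperf3 is installed locally and `iperf3 -s` is running on `server`.
    """
    cmd = ["iperf3", "-c", server, "-t", str(seconds), "-J"]
    if reverse:
        cmd.append("-R")  # reverse mode: the server sends, the client receives
    done = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(done.stdout)


def received_bps(report: dict) -> float:
    """Throughput seen by the receiving side of a TCP test, in bit/s."""
    return float(report["end"]["sum_received"]["bits_per_second"])


def shortfall_percent(measured_bps: float,
                      contracted_bps: float = CONTRACTED_BPS) -> float:
    """How far the measured rate falls below the contracted rate, in percent."""
    return max(0.0, 100.0 * (1 - measured_bps / contracted_bps))


# Example use (not executed here), with <other-site-host> as a placeholder:
#   up = received_bps(run_iperf3("<other-site-host>"))
#   down = received_bps(run_iperf3("<other-site-host>", reverse=True))
#   print(shortfall_percent(up), shortfall_percent(down))
```

Running it once normally and once with `reverse=True` covers both directions from a single machine; swapping client and server sites, as suggested above, is still worth doing as a cross-check.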

BTW, the capture from the firewall is puzzling me, as the packets for both 192.168.142.0/24 and 192.168.143.0/24 are sent to one MAC address, the same for both subnets, while the packets from both 192.168.142.0/24 and 192.168.143.0/24 are coming from another MAC address, again the same one for both. Initially I wanted to be sure that there are no packets with one of the subnets as source and the other one as destination, which is true, but this MAC address difference suggests that there are two routers between those subnets and the firewall, one taking all the ingress traffic and the other one taking all the egress traffic. Maybe the connection between them is the bottleneck, as normally one of them is supposed to bear both directions and the other one to stay ready, and the interconnection between them wasn't intended to carry all traffic?

I will take a look at iperf today. I am more concerned with proving to them that there is something causing the re-transmits and dup-acks. Latency just spikes. There are several routers in the picture, but regretfully I can only see the internal side of 2 of them. I am fairly certain packets are being sent, but not making it to the other side. But since I can't see the external side of the ISP router, I can't prove it.

Hopefully it's readable. It's tough to draw a star diagram in

That "something" is packet loss.

BTW, a bandwidth limitation is basically also a packet loss, except that it is an intentional (and expected) one, and you don't bump into it if your bandwidth demand is lower than the available bandwidth.

So proving to them that the service they deliver provides a lower bandwidth than the contracted one should be enough for them to realize that something is wrong with their network. But if they demonstrate that the network is fine by ping, I am afraid they may be unable (or unwilling) to understand that.
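To illustrate why a ping test can look clean while a bulk transfer suffers, here is a toy simulation of a token-bucket rate limiter. It is a sketch only: the token bucket is a common way to enforce a rate, but nothing in this thread confirms what the ISP's equipment actually does, and the packet sizes, burst size and the function name `policed_loss` are assumptions.

```python
def policed_loss(offered_bps: float, limit_bps: float,
                 packet_bytes: int = 1500, burst_bytes: int = 15000,
                 seconds: float = 10.0) -> float:
    """Fraction of packets dropped by a token-bucket limiter.

    Packets arrive at a constant `offered_bps`; the bucket refills at
    `limit_bps` and holds at most `burst_bytes` worth of tokens.
    """
    packet_bits = packet_bytes * 8
    interval = packet_bits / offered_bps   # time between two packets
    capacity = burst_bytes * 8             # bucket size in bits
    tokens = capacity                      # bucket starts full
    sent = dropped = 0
    t = 0.0
    while t < seconds:
        sent += 1
        if tokens >= packet_bits:
            tokens -= packet_bits          # enough tokens: packet passes
        else:
            dropped += 1                   # not enough tokens: packet is lost
        tokens = min(capacity, tokens + limit_bps * interval)
        t += interval
    return dropped / sent


# One 64-byte ping per second against a 20 Mbit/s limit: no loss at all.
ping_loss = policed_loss(offered_bps=512, limit_bps=20e6, packet_bytes=64)
# A sender pushing 40 Mbit/s into the same limit loses about half its packets.
bulk_loss = policed_loss(offered_bps=40e6, limit_bps=20e6)
```

The low-rate ping never comes near the limit, so it reports no loss, while the bulk sender does. This is why a sustained throughput measurement is the more convincing evidence.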

Some house rules of this site:

an Answer must answer the original Question. Your post clearly hasn't, so I've made it a Comment to the Question.

pictures in comments kill the page layout. Please provide the ASCII-art as your next comment, I'll format it the way the site understands it and replace the picture above with a text version of the same.

Where / How have you taken the traces? For example I would take a trace as close as possible to the WAN point in Ferndale.

The line is 20 Mbit/s on both sides. And I am capturing the LAN side of the firewall and the internal sides of the ISP router ports by mirroring each port to my port.

In the trace from the FW I can see a lot of "ACKed unseen segment" from the Ferndale site. So the packets arrived at the Ferndale endpoint but are not in the FW trace. Could there be a different path? Or maybe I missed something.

@Christian_R, it seems to me to be just an issue of the port mirroring at the switch - if you apply a display filter like `tcp.stream == 128 and (tcp.ack == 6532 or tcp.nxtseq == 6532)`, you'll see that the "ACKed unseen segment" packet has the same timestamp (to 1 μs) as the segment it ACKs but has been captured first.

How are the duplex settings matching up?

Sounds like you may have a duplex mismatch. If you have full-duplex on one side and half-duplex on the other side, you will see a lot of dropped packets.

@wbenton yes, that should be checked, I think so too.

@jwiener Do you see any uncommon packet drops at the switch interfaces?

@sindy yes, that seems to be the answer to my finding... But why is it so significant in the FW trace?

heh, I haven't read your post carefully enough and thought you were talking about the Ferndale trace only... my explanation above is valid for Ferndale, but you are right that in the FW trace there are cases where some ACKed data are really missing in the capture. So here a duplex mismatch, low speed on the monitoring port as compared to the captured data, or capture loss could be an explanation if the trace has been taken using port mirroring on a hardware switch. But the unusual MAC addresses like 00:00:00:00:00:10 and 06:00:00:00:00:10 seen in the capture, as well as the occurrence of four mutually similar addresses, 69:31:6c:61:6e:00, 4f:62:77:61:6e:00, 4f:62:6c:61:6e:00 and 69:31:77:61:6e:00, suggest that the capture has been taken in some virtualized environment.

I would look for duplex mismatch somewhere along the line.

If one side is half duplex while the other is full duplex, you will see numerous packet collisions/drops and thus retransmits will occur as well.

Look at per port collisions for all ports on both switches and routers to see if you can find the culprit.

FWIW
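If the per-port counters can be exported, the check suggested above could be automated roughly like this. It is a sketch with assumptions: the counter names (`late_collisions`, `fcs_errors`, `runts`) and port names are hypothetical and have to be mapped to whatever your switches and routers actually report. The rule of thumb it encodes is that, in a duplex mismatch, the half-duplex end tends to log late collisions while the full-duplex end tends to log FCS/CRC errors and runts.

```python
def duplex_suspects(ports: dict) -> list:
    """Return (port, reasons) pairs for ports whose counters hint at a duplex mismatch.

    `ports` maps a port name to a dict of error counters; the counter names
    used here are illustrative, not taken from any particular vendor.
    """
    suspects = []
    for name, counters in ports.items():
        reasons = []
        if counters.get("late_collisions", 0) > 0:
            reasons.append("late collisions (typical of the half-duplex side)")
        if counters.get("fcs_errors", 0) > 0 or counters.get("runts", 0) > 0:
            reasons.append("FCS errors or runts (typical of the full-duplex side)")
        if reasons:
            suspects.append((name, reasons))
    return suspects


# Made-up sample data, for illustration only.
sample_ports = {
    "sw1/1": {"late_collisions": 0, "fcs_errors": 0, "runts": 0},
    "sw1/2": {"late_collisions": 37, "fcs_errors": 0, "runts": 0},
    "sw2/8": {"late_collisions": 0, "fcs_errors": 112, "runts": 9},
}
flagged = duplex_suspects(sample_ports)
```

Counters are cumulative on most devices, so it helps to clear them or take two snapshots and compare the difference while the problem is occurring.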

I agree, but for some reason the ISP is refusing to change the duplex. Right now it is set to auto on both sides. I have asked for it to be hardcoded to 100 half. I see no errors or collisions on the switches at either site.

Why do you want to set it at 100 half??? Have you checked every switch or only the border switches?

Why 100 half in particular? Anyway, the speed and duplex negotiation may be a nightmare between different vendors' equipment, but if you have auto/auto and the ports of your switches connected to the ISP's routers report neither errors nor collisions, these settings at the edge between you and the ISP are not the cause of the packet drops. This does not mean that it cannot be the reason somewhere inside their network as you've mentioned a power outage. The speed&duplex negotiation may have failed somewhere, resulting in full duplex mode on one end of the wire and half duplex on the other. But from outside, nothing more can be discovered than just packets being dropped or not.

Can you say something about the firewall site and how exactly the capture is done there? Because the MAC addresses are weird, and even more important, the absence in the capture of frames which must have been transmitted because they have been acknowledged is weird. Maybe there is some kind of port aggregation and some packets (precisely, frames) take another path than the one you capture?

But still, as you say that you have issues between Ferndale and Warren alone, where the firewall isn't in the path, this should not be the main path of investigation. Have you got anywhere with iperf? The point is that when you use speedtest.net as a traffic generator, the firewall becomes involved, so it is hard to prove to the ISP guys that the firewall is not the cause of the trouble.

@sindy I agree.