I'm having an issue with an Application Delivery Controller (ADC) where the vendor and I can't agree on the cause. Setup:

Problem:

Notes:

Questions:

Side note:

asked 27 Apr '17, 07:30 Uli |

3 Answers:

Yes, in your case I would tune the minRTO to 300 ms. An RTT of 200 ms is not uncommon nowadays, but it is not a low RTT, and we must avoid going into congestion avoidance mode when there is no real packet loss. How often do you see an RTO? The most common retransmission is a Fast Retransmission, which stays untouched. answered 11 May '17, 14:17 Christian_R |

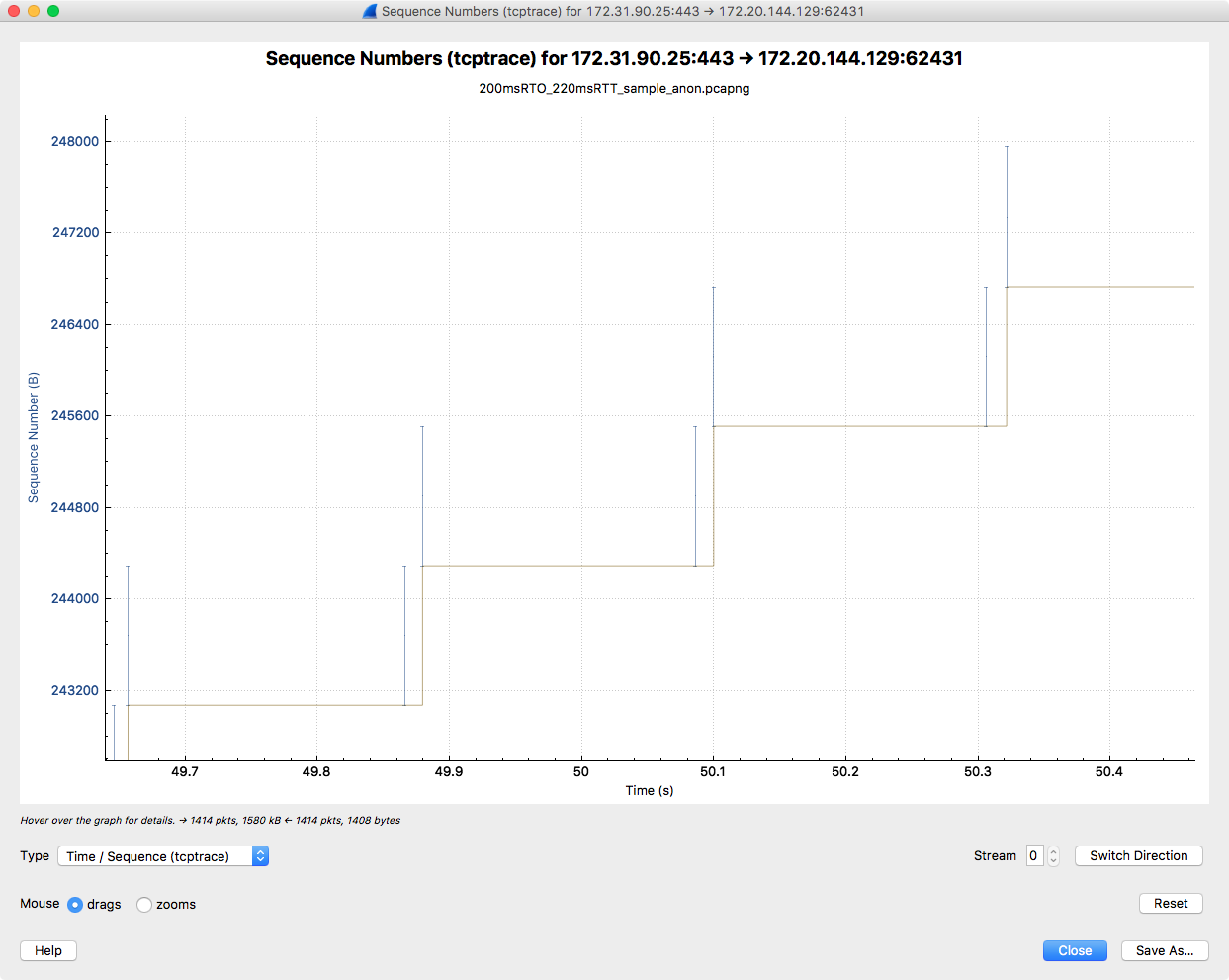

From a brief look at the Time Sequence Graph in your capture file this looks very much like a Nagle Algorithm + Delayed ACK issue, which is described nicely here. You might want to try turning off these options at least on the client and see if that helps. answered 02 May '17, 08:51 djdawson |

I suspect the Nagle/Delayed ACK issue on the client, not the server. If you look at the following screenshots of the Time Sequence graph of the Server-to-Client traffic, you can clearly see the client waiting for each packet for just over 200 msec before sending the ACK for the retransmitted packet, which was probably sent as a result of the RTO at the server. Since Nagle and Delayed ACK are not negotiated between TCP endpoints, it would be necessary to disable these options on the client in order to verify whether that's the issue.

answered 02 May '17, 16:45 djdawson edited 04 Jan '19, 10:47 cmaynard ♦♦ |

Thanks for taking a look.

It's not a Nagle/Delayed ACK issue. The RTT is ~240 ms, yet the ADC (server) already retransmits data after 205 ms. The configured TCP profile (server side) has Delayed ACK and Nagle disabled.

The client ACKs data after two packets (or after a PSH).

Thanks again for taking another look.

The client was located in Bangkok/Thailand and the server in Bavaria/Germany. The latency between these two locations is approx. 100-120 ms (=> Round Trip Time ~220-240 ms).

The capture was taken at the server site.

In the screenshot, the server transmits data @49.88 and retransmits the same data @50.08. The ACK from the client arrives @50.10. Given the round trip time, this must be the ACK for the initial data transmission, not for the retransmission.

Hence my initial question about how the RTO is calculated at the server site.
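The timing argument above can be checked with simple arithmetic. The following is only a sketch: the timestamps are the ones quoted from the capture, converted to milliseconds, and `rtt_min_ms` is an assumed value taken from the lower end of the ~220-240 ms estimate.

```python
# Timestamps from the server-side capture, in milliseconds.
t_send_ms = 49_880        # original data segment leaves the server
t_retransmit_ms = 50_080  # server retransmits the same data
t_ack_ms = 50_100         # ACK from the client arrives

# Assumption: lower bound of the estimated round trip time (~220-240 ms).
rtt_min_ms = 220

elapsed_before_retransmit_ms = t_retransmit_ms - t_send_ms  # time until the timer fired
ack_after_original_ms = t_ack_ms - t_send_ms                # ACK delay seen from the first send
ack_after_retransmit_ms = t_ack_ms - t_retransmit_ms        # ACK delay seen from the retransmit

# An ACK cannot answer a segment sent less than one RTT before the ACK arrived.
ack_is_for_original = ack_after_retransmit_ms < rtt_min_ms <= ack_after_original_ms

# The retransmission is premature if the timer fired before one RTT had passed.
retransmit_was_premature = elapsed_before_retransmit_ms < rtt_min_ms

print(elapsed_before_retransmit_ms, ack_after_original_ms, ack_after_retransmit_ms)
print(ack_is_for_original, retransmit_was_premature)
```

Under these assumptions the retransmission timer fired about 20 ms before the ACK for the original segment could possibly have arrived.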

You're right - I was confused about where the capture was taken, and agree that this would be a server issue. Sorry for any confusion I may have caused.

Getting back to your original questions, I agree that it probably shouldn't be necessary to configure a minimum RTO, since TCP will compute its own, and I also agree that it could cause problems for clients with a small RTO. I also think it seems reasonable that setting a minRTO shouldn't affect the RTO calculation, but it also wouldn't surprise me if it did, either because of a bug or some other issue in the particular TCP stack. It would seem useful to see how things perform with TCP using its default settings, since the RTO behavior of the server certainly doesn't look right.
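For reference, here is a minimal sketch of the standard RTO computation from RFC 6298, with the minimum RTO applied only as a lower bound on the result. It is not the ADC's implementation, which is unknown here; the class name `RtoEstimator`, the parameter names, and the 1 ms clock granularity are all assumptions made for illustration.

```python
class RtoEstimator:
    """RFC 6298 style RTO estimator (illustrative sketch, not the ADC's code)."""

    ALPHA = 1 / 8  # gain for the smoothed RTT
    BETA = 1 / 4   # gain for the RTT variance
    K = 4          # variance multiplier

    def __init__(self, min_rto_ms, clock_granularity_ms=1.0):
        self.min_rto_ms = min_rto_ms
        self.granularity_ms = clock_granularity_ms  # assumed timer granularity
        self.srtt = None
        self.rttvar = None

    def update(self, rtt_ms):
        """Feed one RTT sample (ms) and return the resulting RTO (ms)."""
        if self.srtt is None:
            # First measurement: SRTT = R, RTTVAR = R / 2.
            self.srtt = rtt_ms
            self.rttvar = rtt_ms / 2
        else:
            # RTTVAR is updated with the old SRTT, then SRTT is updated.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - rtt_ms)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * rtt_ms
        computed = self.srtt + max(self.granularity_ms, self.K * self.rttvar)
        # The minimum acts as a floor only; it never lowers the computed value.
        return max(self.min_rto_ms, computed)


# Steady 240 ms RTT with a 200 ms floor, as in the scenario discussed here.
estimator = RtoEstimator(min_rto_ms=200)
rtos = [estimator.update(240) for _ in range(50)]
print(min(rtos) > 240)
```

With a steady 240 ms RTT, an estimator of this shape keeps the RTO above the RTT regardless of a 200 ms floor, which is why a retransmission after 205 ms suggests the stack is doing something different.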

When I configure a greater minRTO (e.g. 400 ms), the connection with a 205 ms RTT works smoothly and cwnd grows normally.

Configuring no minRTO at all is not possible on the ADC. One has to be set.